Fine-tuning large language models (LLMs) is a crucial step in adapting pre-trained models to specific tasks or domains. Open models like Llama, Mistral, and Gemma perform well out of the box, but fine-tuning them often presents significant challenges, especially in time, compute, and memory. As demand for customized and optimized AI models grows, tools like Unsloth are emerging to streamline the fine-tuning process for researchers, developers, and businesses alike.

What is Unsloth AI?

Unsloth is a platform designed to accelerate the fine-tuning of large language models (LLMs) such as Llama-3, Mistral, Phi-3, and Gemma. It was built by two brothers: Daniel Han, a software and data specialist, and Michael Han, a designer and product engineer. According to its developers, Unsloth fine-tunes these models about 2x faster than standard methods, with 70% less memory usage and no degradation in accuracy. Whether you’re a developer, researcher, or AI enthusiast, it simplifies the complex task of fine-tuning LLMs, allowing you to create custom models with enhanced performance and efficiency.

What is Fine-Tuning?

Fine-tuning is the process of adapting a pre-trained LLM to specific tasks or domains by updating its internal parameters. Unlike general prompt engineering, which relies on crafting input queries to guide model behavior, fine-tuning modifies the “brains” of the LLM itself, allowing it to learn new skills, understand specialized contexts, or perform domain-specific tasks with greater accuracy. This method involves a supervised learning approach where labeled data specific to the task is used to train the model further. The goal is to create a task-optimized LLM that delivers more reliable and efficient performance compared to the original pre-trained version.

For instance, a general-purpose LLM like Llama-2 can be fine-tuned to excel in legal document summarization or medical diagnosis support by training it on relevant datasets. This customization ensures that the model not only retains its broad understanding of language but also acquires specialized skills that make it more effective for specific applications.
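To make "labeled data specific to the task" concrete, here is a minimal sketch of how supervised examples are often turned into training text. The field names (`instruction`, `input`, `output`), the prompt template, and the `</s>` end-of-sequence token are assumptions for illustration, modeled on the widely used Alpaca-style layout; the example record is invented.

```python
# Hypothetical labeled record for a legal-summarization task.
# Field names follow the common Alpaca-style layout (an assumption here).
EXAMPLES = [
    {
        "instruction": "Summarize the following clause in one sentence.",
        "input": "The Tenant shall pay rent on the first day of each month.",
        "output": "Rent is due on the first of every month.",
    },
]

# Illustrative template: the model learns to produce the text after "Response".
PROMPT_TEMPLATE = (
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n{output}"
)


def format_example(example: dict, eos_token: str = "</s>") -> str:
    """Turn one labeled record into a single training string.

    The end-of-sequence token marks where the answer stops; the real token
    depends on the tokenizer, so "</s>" is only a placeholder.
    """
    return PROMPT_TEMPLATE.format(**example) + eos_token


training_texts = [format_example(example) for example in EXAMPLES]
print(training_texts[0])
```

During fine-tuning, strings like these are tokenized and the model's parameters are updated so that it predicts the response portion more accurately.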

Why Is Fine-Tuning Important?

Fine-tuning bridges the gap between generic AI capabilities and real-world application requirements. While pre-trained models like Llama or Mistral are powerful, they may fall short in understanding nuanced or domain-specific questions without contextual fine-tuning.

For example, a law firm handling thousands of legal documents may need an LLM that excels in document summarization and legal terminology. Fine-tuning transforms a general-purpose model into a specialized tool that meets such specific needs. Similarly, fine-tuning can help models handle tasks like sentiment analysis, code generation, or personalized chatbot responses more effectively than relying solely on prompt engineering.

In an era where industries demand bespoke AI solutions, fine-tuning ensures that LLMs align more closely with organizational goals, data, and operational requirements.

Benefits of Fine-Tuning

- Improved Performance: Fine-tuning enhances a model’s ability to deliver accurate and context-specific outputs. Studies, such as those in the InstructGPT paper, demonstrate that fine-tuned models significantly outperform prompt-engineered counterparts in various tasks, earning higher evaluation scores for relevance and coherence.

- Customization for Specific Use Cases: Fine-tuning allows organizations to create models tailored to their unique requirements. By training an LLM on proprietary datasets, businesses can achieve models optimized for their niche, whether it’s medical diagnoses, conversational AI, or domain-specific research.

- Efficient Resource Utilization: Fine-tuned models are often more efficient to deploy. For example, techniques like LoRA (Low-Rank Adaptation) enable lightweight fine-tuning, making deployment faster and reducing computational demands compared to full-scale LLMs.

- Scalability and Flexibility: With a fine-tuned LLM, businesses can scale their solutions without requiring significant overhauls. These models can adapt quickly to changes in the domain or task requirements, ensuring long-term usability.

- Competitive Advantage: A fine-tuned, in-house LLM trained on unique datasets can be a significant asset for organizations. It not only boosts operational efficiency but also serves as a selling point to clients, showcasing the use of proprietary AI tools for improved outcomes.
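To see why LoRA counts as lightweight, it helps to count trainable parameters. LoRA freezes a weight matrix W of shape d_out x d_in and trains only a low-rank update B·A, where B is d_out x r and A is r x d_in. The sketch below compares the two counts for a single layer; the 4096 x 4096 size and rank 16 are illustrative assumptions, not figures from any particular model.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a LoRA update B @ A.

    B has shape (d_out, rank) and A has shape (rank, d_in);
    the original matrix stays frozen.
    """
    return rank * (d_out + d_in)


# Illustrative layer size and rank (assumptions, not from a specific model).
D_OUT, D_IN, RANK = 4096, 4096, 16

full = full_finetune_params(D_OUT, D_IN)
lora = lora_params(D_OUT, D_IN, RANK)
ratio = lora / full

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (r={RANK}):    {lora:,} trainable parameters")
print(f"LoRA trains {ratio:.2%} of the layer's parameters")
```

For this layer, LoRA trains under 1% of the parameters that full fine-tuning would, which is where most of the savings in gradients and optimizer state come from.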

Fine-tuning thus transforms powerful but generalized LLMs into task-specific experts, empowering businesses to leverage AI more effectively across diverse domains.

Unsloth’s Role in Fine-Tuning LLMs

Unsloth is a game-changer in the world of LLM fine-tuning, offering efficiency, versatility, and ease of use. Whether you’re a beginner experimenting with fine-tuning or a seasoned developer tackling large-scale AI projects, Unsloth provides a comprehensive suite of tools to meet your needs. Here’s why Unsloth stands out:

Efficiency

Unsloth stands out by delivering exceptional efficiency in fine-tuning large language models. With a process that is 2x faster than traditional methods, it ensures that developers can achieve results in a fraction of the time. Moreover, Unsloth’s fine-tuning techniques require 70% less memory, making it ideal for resource-conscious users looking to maximize performance without compromising on quality.
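Memory is usually the first limit you hit on a single GPU, and the numeric precision of the weights is a large part of it. The back-of-the-envelope sketch below estimates the memory needed just to hold model weights at 16-bit and 4-bit precision, the two LoRA options the open-source version supports. The 8-billion-parameter size is an assumption for illustration, and the estimate ignores activations, gradients, optimizer state, and quantization overhead. It is not how Unsloth’s 70% figure is derived; it only shows why bit width matters.

```python
def weight_memory_gib(num_params: float, bits_per_param: int) -> float:
    """Approximate memory (GiB) needed to store the weights alone."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1024**3


# Assumed model size for illustration: 8 billion parameters.
NUM_PARAMS = 8e9

fp16_gib = weight_memory_gib(NUM_PARAMS, 16)
int4_gib = weight_memory_gib(NUM_PARAMS, 4)
saving = 1 - int4_gib / fp16_gib

print(f"16-bit weights: {fp16_gib:.2f} GiB")
print(f" 4-bit weights: {int4_gib:.2f} GiB")
print(f"weight memory saved by 4-bit storage: {saving:.0%}")
```

Under these assumptions, 16-bit weights need roughly 15 GiB, while 4-bit weights need under 4 GiB, which is comfortably within a 16 GB T4’s memory.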

Scalability

For users handling large-scale AI projects, Unsloth offers unmatched scalability. It supports setups ranging from single GPUs to configurations with up to 8 GPUs and even multi-node environments. This flexibility makes it suitable for both small teams and enterprise-level deployments, ensuring that projects of any size can be executed efficiently.

Versatility

Unsloth provides robust support for a wide range of LLMs, including Llama (versions 1, 2, and 3), Mistral, Gemma, and Phi-3. Its adaptability allows developers to fine-tune models for various tasks, whether it’s language learning, summarization, conversational AI, or instruction-following. This versatility makes it a go-to solution for diverse AI applications.

Ease of Use

Designed with user-friendliness in mind, Unsloth is open-source and easy to install. It can be set up locally or integrated with platforms like Google Colab to take advantage of free GPU resources. Comprehensive documentation and step-by-step guides are available to assist users throughout the fine-tuning process, making it accessible even to those new to LLMs.

Integration with Third-Party Tools

Unsloth enhances its functionality by allowing seamless integration with third-party tools. For instance, its compatibility with Google Colab provides users with powerful training capabilities, enabling them to optimize their workflows with minimal effort. This integration ensures that Unsloth not only simplifies fine-tuning but also extends its utility for advanced AI development.

Getting Started with Unsloth

Using Unsloth is simple, and most users choose to run it through Google Colab, which provides free GPU resources for training. Here’s how you can get started:

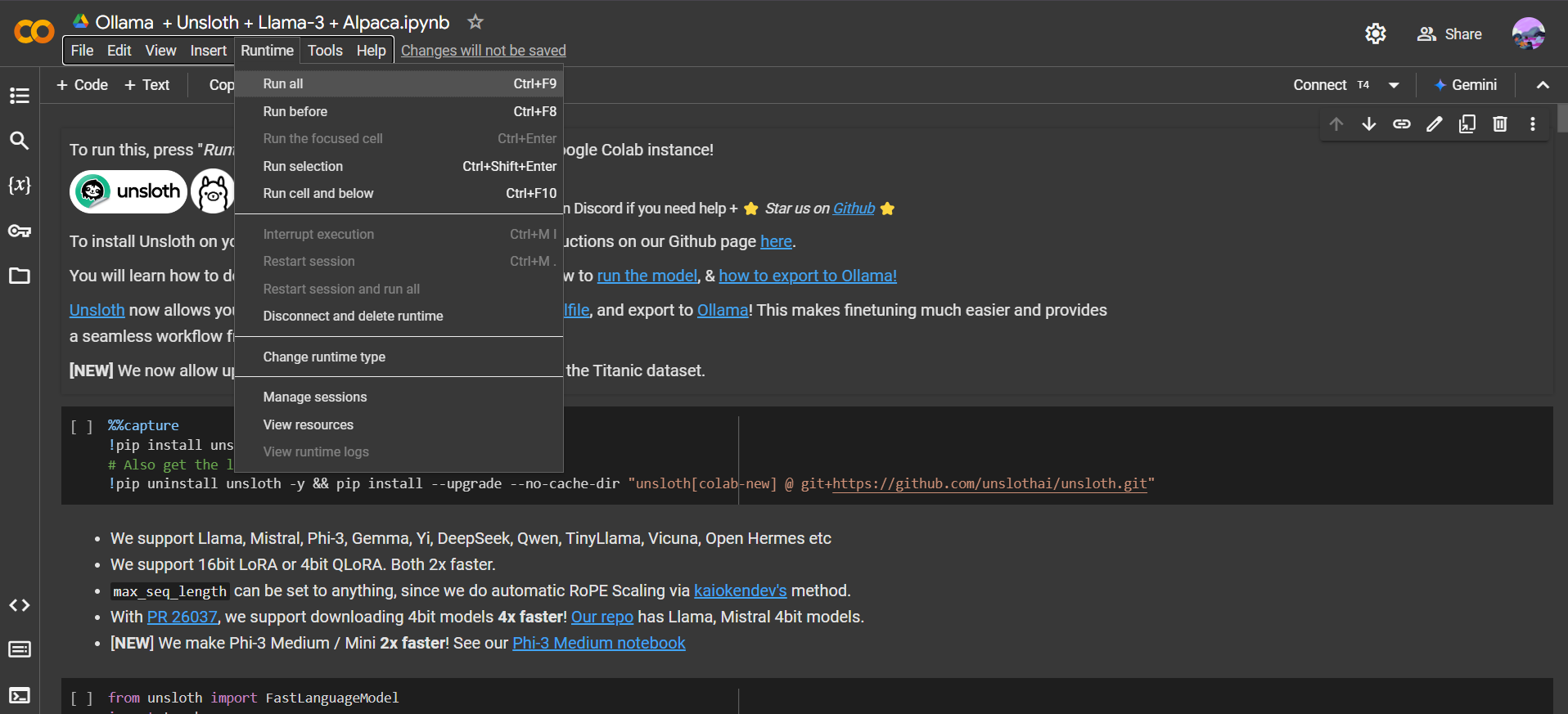

To install Unsloth on Google Colab:

- Open this Notebook file on Google Colab.

- From the Runtime dropdown, go to Change runtime type and select T4 (if it is not already selected).

- From the Runtime dropdown, select Run all.

You can access all Unsloth notebooks via the links in the documentation. The notebook we used here is the Ollama one: follow its step-by-step guide to fine-tune Llama-3 and export your model to Ollama or other platforms. Unsloth’s efficient fine-tuning tools allow you to create and use your own custom models with ease.
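For readers who want to see what the notebook does at its core, here is a minimal sketch of loading a 4-bit Llama-3 model with Unsloth and attaching LoRA adapters. It follows the pattern used in Unsloth’s public notebooks as we understand it; the model name, sequence length, and LoRA values are assumptions and may not match the current notebook, so check the official documentation before relying on them. The helper `load_model_with_lora` is a hypothetical name, and calling it requires a CUDA GPU with Unsloth installed (`pip install unsloth`).

```python
# Illustrative settings; the values are assumptions, not official recommendations.
MODEL_CONFIG = {
    "model_name": "unsloth/llama-3-8b-bnb-4bit",  # pre-quantized 4-bit checkpoint
    "max_seq_length": 2048,
    "dtype": None,          # None lets the library choose a suitable dtype
    "load_in_4bit": True,   # 4-bit weights to reduce GPU memory use
}

LORA_CONFIG = {
    "r": 16,                # LoRA rank
    "target_modules": [
        "q_proj", "k_proj", "v_proj", "o_proj",
        "gate_proj", "up_proj", "down_proj",
    ],
    "lora_alpha": 16,
    "lora_dropout": 0,
    "bias": "none",
    "use_gradient_checkpointing": "unsloth",
    "random_state": 3407,
}


def load_model_with_lora():
    """Load the base model and wrap it with trainable LoRA adapters.

    The import is done lazily so this file can be read or imported on a
    machine without a GPU or without Unsloth installed.
    """
    from unsloth import FastLanguageModel

    model, tokenizer = FastLanguageModel.from_pretrained(**MODEL_CONFIG)
    model = FastLanguageModel.get_peft_model(model, **LORA_CONFIG)
    return model, tokenizer


# On a GPU runtime with Unsloth installed:
# model, tokenizer = load_model_with_lora()
```

From here, the notebook passes the model, tokenizer, and a formatted dataset to a trainer, then exports the result.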

To learn more about how to install or update Unsloth locally using pip or conda, check out this comprehensive installation guide.

Unsloth Pricing Plans

Unsloth offers flexible pricing options to cater to different use cases:

- Free (Open-Source): This version supports Llama 1, 2, 3, Mistral, and Gemma, with single GPU support and LoRA training options (4-bit and 16-bit). It’s perfect for getting started and experimenting with LLM fine-tuning.

- Unsloth Pro: Ideal for those who need more power, this plan unlocks multi-GPU support, providing 2.5x faster training and 20% less VRAM usage compared to the open-source version. It’s designed for serious users who want to scale up.

- Unsloth Enterprise: For enterprise users, this plan offers up to 30x faster training, multi-node support, and 30% more accuracy. It includes all the Pro features and is designed for full-scale, production-level training and inference.

Conclusion

Unsloth has emerged as a powerful solution to the time and resource challenges of fine-tuning, providing a platform that accelerates the fine-tuning of large language models without compromising on accuracy. By offering faster training times, reduced memory usage, and scalability options for various use cases, Unsloth makes it easier than ever for users to unlock the full potential of their LLMs. Whether you’re an individual developer or part of a large enterprise, Unsloth’s intuitive, open-source tools can help you build and deploy customized models efficiently, paving the way for more innovative AI applications.

FAQs

What is Unsloth?

Unsloth is a tool designed to simplify and accelerate the fine-tuning of large language models (LLMs), helping developers and organizations optimize models for specific tasks or domains with minimal effort.

Is Unsloth free?

Unsloth offers various pricing plans, including free and premium tiers, depending on the features and scale of usage.

Why is Unsloth fast?

Unsloth is fast because it leverages cutting-edge optimization techniques, lightweight fine-tuning methods like LoRA, and an intuitive interface that minimizes setup time and resource overhead.

What is the alternative to Unsloth?

Alternatives to Unsloth include other fine-tuning tools like Hugging Face Transformers, OpenAI’s fine-tuning API, and TensorFlow. However, these alternatives may require more manual setup or technical expertise.