
Deep learning has transformed the way machines analyze and interpret data, unlocking possibilities that once seemed like science fiction, such as generating realistic images, translating languages, and detecting anomalies in real time. Behind these capabilities are processes that let models understand, compress, and efficiently represent large amounts of data. One concept central to many deep learning tasks and generative applications is latent space: an abstract, compressed representation of data that lies at the heart of how deep learning models handle information.

To learn more about Deep Learning basics, check out these official guides on AWS and Oracle.

This article delves into latent space, a core concept that enables deep learning models to recognize patterns and simplify data, empowering them to perform extraordinary tasks across a range of fields, from image generation to natural language processing. We’ll explore the role of latent space in various types of neural networks, the reasons it is essential in machine learning, practical visualization techniques, and real-world applications. Whether you’re new to AI or a seasoned professional, this article provides a comprehensive look into one of deep learning’s most foundational concepts.

What is Latent Space?

To visualize latent space, imagine how people recognize different types of animals. When we see animals like cats, dogs, or horses, we don’t memorize every detail of each species but instead create a mental template based on general features: cats are small, have whiskers and retractable claws; dogs vary in size but often have floppy ears and wagging tails; horses are large, have long legs, and distinct mane structures.

If we encounter a new animal that is small (and cute) with whiskers and retractable claws, our mental representation helps us classify it as a cat, even without knowing every specific detail. Similarly, a deep learning model maps inputs with shared features to nearby points in latent space, so it can classify a new example based on where it lands relative to these learned patterns.

In essence, latent space serves as a blueprint of the input data: it retains only the most informative characteristics, which reduces the computational cost of working with high-dimensional data. By mapping data to latent space, deep learning models can identify underlying structures that are not obvious in the raw input, allowing them to perform tasks more efficiently and accurately.

Why Latent Space Matters in Deep Learning

The primary objective of deep learning is to transform raw data, like the pixel values of an image, into suitable internal representations or feature vectors from which the learning subsystem, often a classifier, can detect or classify patterns. This is where latent space becomes essential: these internal representations (the extracted features) are precisely what we refer to as the latent space.

A deep learning model takes raw data as input and outputs discriminative features that typically lie in a lower-dimensional space called the latent space. These features allow the model to tackle tasks such as classification, regression, and reconstruction. Encoding data into a low-dimensional latent space before classification or regression acts as a form of compression, which matters most for high-dimensional input. In an image classification task, for instance, the input can contain hundreds of thousands of pixel values. Encoding it into latent space lets the downstream classifier work with a compact set of features that capture the useful patterns, rather than learning directly from every individual pixel, which would be far more costly and harder to generalize from.
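To make the encode-then-classify idea concrete, here is a minimal NumPy sketch. It assumes a hypothetical encoder: a fixed random projection from 784 pixel values to a 16-dimensional latent vector stands in for a trained network, and the "images" are synthetic noisy copies of three random prototypes. A nearest-centroid classifier then works entirely in the 16-dimensional latent space.

```python
import numpy as np

rng = np.random.default_rng(0)
INPUT_DIM, LATENT_DIM, NUM_CLASSES = 784, 16, 3

# Hypothetical stand-in for a trained encoder: a fixed random projection
# from 784 pixel values to a 16-D latent vector (roughly preserves distances).
W_enc = rng.normal(size=(INPUT_DIM, LATENT_DIM)) / np.sqrt(LATENT_DIM)

def encode(x):
    """Map a batch of flattened images (n, 784) to latent vectors (n, 16)."""
    return x @ W_enc

# Synthetic "images": each class is a noisy copy of its own random prototype.
prototypes = rng.uniform(0.0, 1.0, size=(NUM_CLASSES, INPUT_DIM))

def make_batch(n_per_class):
    x = np.vstack([p + 0.1 * rng.normal(size=(n_per_class, INPUT_DIM)) for p in prototypes])
    y = np.repeat(np.arange(NUM_CLASSES), n_per_class)
    return x, y

x_train, y_train = make_batch(50)
x_test, y_test = make_batch(20)

# The classifier only ever sees 16 numbers per image, never the 784 raw pixels.
z_train = encode(x_train)
centroids = np.stack([z_train[y_train == c].mean(axis=0) for c in range(NUM_CLASSES)])

def predict(x):
    z = encode(x)
    # Distance from each latent vector to each class centroid: shape (n, NUM_CLASSES).
    dists = np.linalg.norm(z[:, None, :] - centroids[None, :, :], axis=-1)
    return dists.argmin(axis=1)

accuracy = np.mean(predict(x_test) == y_test)
print(f"test accuracy in a {LATENT_DIM}-D latent space: {accuracy:.2f}")
```

In a real system the encoder would be learned, so that distances in latent space reflect meaningful similarity between inputs rather than just raw pixel distance.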

Historical Context of Latent Space in Machine Learning

Within artificial intelligence, the term latent space became prominent in the context of unsupervised learning and neural networks, although the underlying idea of latent (hidden) variables is older and comes from statistics. Researchers used it to describe the abstract, lower-dimensional representations in which neural networks encode meaningful patterns in data without explicit labels. By mapping data into this latent space, models learned to capture hidden structures that cannot be directly observed in the raw input, making latent space a foundational concept for handling high-dimensional data efficiently in AI.

Early Dimensionality Reduction Techniques: PCA and LDA

Latent space has its roots in dimensionality reduction and feature extraction techniques. Principal Component Analysis (PCA), developed in the early 20th century, was one of the first approaches to reducing the dimensionality of data. It finds the principal components, the orthogonal directions of maximum variance, and keeps only the top few. Projecting the data onto these directions yields simplified, lower-dimensional representations that are easier to analyze. PCA's success showed the potential of compressed, meaningful data representations, paving the way for modern latent spaces.
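PCA can be computed directly from the singular value decomposition (SVD) of the centered data. The sketch below is a minimal NumPy version, assuming toy 3-D data that varies mostly along one direction; the function name pca is our own illustrative helper, not a library API.

```python
import numpy as np

def pca(x, n_components):
    """Project the rows of x onto their top principal components.

    Returns the low-dimensional codes, the components (one per row), the mean
    needed to map codes back to the original space, and the fraction of total
    variance each kept component explains.
    """
    mean = x.mean(axis=0)
    centered = x - mean
    # Rows of vt are orthonormal directions, sorted by decreasing singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    codes = centered @ components.T
    explained = (s ** 2 / np.sum(s ** 2))[:n_components]
    return codes, components, mean, explained

# Toy data: 3-D points that vary mostly along one direction, plus a little noise.
rng = np.random.default_rng(0)
t = rng.normal(size=(200, 1))
x = t @ np.array([[2.0, 1.0, 0.5]]) + 0.05 * rng.normal(size=(200, 3))

codes, components, mean, explained = pca(x, n_components=1)
reconstruction = codes @ components + mean   # map the 1-D codes back to 3-D
print("explained variance ratio:", explained)
print("reconstruction MSE:", np.mean((x - reconstruction) ** 2))
```

Keeping a single component here compresses each 3-D point to one number while losing only the small noise that lies off the dominant direction.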

Similarly, Linear Discriminant Analysis (LDA), another early technique, reduces data dimensions for classification tasks. Unlike PCA, LDA is supervised: it uses class labels to find the linear combination of features that maximizes the separation between class means relative to the spread within each class. Mapping data into this lower-dimensional space, where the classes are better separated, makes classification and analysis easier. Together, PCA and LDA provided early evidence that data could be represented and interpreted in reduced dimensions, helping researchers recognize the potential of latent spaces for simplifying complex information.
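For two classes, Fisher's LDA criterion has a closed-form solution. This minimal NumPy sketch assumes two synthetic Gaussian classes that share an elongated covariance. Their means differ along the low-variance direction (1, -1), so the top principal component (roughly along (1, 1)) would not separate them, while the LDA direction does.

```python
import numpy as np

def fisher_lda_direction(x0, x1):
    """Two-class Fisher LDA: the unit vector w proportional to S_w^{-1} (mu1 - mu0),
    which maximizes between-class separation relative to within-class scatter."""
    mu0, mu1 = x0.mean(axis=0), x1.mean(axis=0)
    s_w = (x0 - mu0).T @ (x0 - mu0) + (x1 - mu1).T @ (x1 - mu1)  # within-class scatter
    w = np.linalg.solve(s_w, mu1 - mu0)
    return w / np.linalg.norm(w)

rng = np.random.default_rng(1)
cov = np.array([[1.0, 0.9], [0.9, 1.0]])  # both classes share this elongated covariance
x0 = rng.multivariate_normal([0.0, 0.0], cov, size=100)
x1 = rng.multivariate_normal([1.0, -1.0], cov, size=100)

w = fisher_lda_direction(x0, x1)
z0, z1 = x0 @ w, x1 @ w                   # 1-D projections of each class
threshold = (z0.mean() + z1.mean()) / 2   # midpoint between projected class means
accuracy = (np.sum(z0 < threshold) + np.sum(z1 >= threshold)) / (len(z0) + len(z1))
print("LDA direction:", w, "accuracy:", accuracy)
```

The single projected coordinate z is a one-dimensional "latent" feature chosen specifically to make the classes easy to tell apart.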

The Shift to Neural Network-Based Representations

With advancements in machine learning, researchers began developing neural network-based methods to capture non-linear relationships in data, which PCA and LDA cannot model because both are linear projections. This shift led to the emergence of autoencoders, a type of neural network that learns efficient, compressed representations of data by mapping it into latent space using an encoder-decoder structure.

Autoencoders consist of two main components:

- Encoder: Compresses input data into a latent representation, reducing its dimensionality.

- Decoder: Reconstructs the original data from the latent representation. Training the pair to minimize reconstruction error pushes the compressed representation to retain the input's critical features.

Autoencoders introduced an adaptable, data-specific latent space that can learn complex, non-linear patterns, making them well suited to tasks such as noise reduction, anomaly detection, and dimensionality reduction.
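The encoder-decoder idea fits in a few dozen lines of NumPy. This sketch assumes a one-layer tanh encoder, a linear decoder, and toy 8-D data generated from a hidden 2-D factor; it trains with plain gradient descent on mean squared reconstruction error, with the gradients written out by hand instead of using a framework such as TensorFlow.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: 8-D points generated from a hidden 2-D factor through a nonlinearity.
n, d, k = 500, 8, 2
hidden = rng.normal(size=(n, k))
x = np.tanh(hidden @ rng.normal(size=(k, d))) + 0.05 * rng.normal(size=(n, d))

# Encoder: z = tanh(x @ W1 + b1)    Decoder: x_hat = z @ W2 + b2
W1, b1 = 0.1 * rng.normal(size=(d, k)), np.zeros(k)
W2, b2 = 0.1 * rng.normal(size=(k, d)), np.zeros(d)

def encode(x):
    return np.tanh(x @ W1 + b1)

def decode(z):
    return z @ W2 + b2

lr, losses = 0.1, []
for step in range(2000):
    # Forward pass through encoder and decoder.
    z = encode(x)
    err = decode(z) - x
    losses.append(np.mean(err ** 2))

    # Backward pass: gradients of the mean squared reconstruction error.
    g_out = 2 * err / err.size
    g_W2, g_b2 = z.T @ g_out, g_out.sum(axis=0)
    g_pre = (g_out @ W2.T) * (1 - z ** 2)  # chain rule through tanh
    g_W1, g_b1 = x.T @ g_pre, g_pre.sum(axis=0)

    # In-place updates, so encode() and decode() always use the current weights.
    for param, grad in ((W1, g_W1), (b1, g_b1), (W2, g_W2), (b2, g_b2)):
        param -= lr * grad

print(f"reconstruction MSE: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

After training, encode(x) gives each 8-D point a 2-D latent code, and decode maps codes back to approximate reconstructions.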

Variational Autoencoders (VAEs) expanded on this idea by introducing probabilistic elements into latent space. Instead of mapping each input to a single point, a VAE's encoder outputs the parameters of a probability distribution over the latent space, typically a Gaussian with a learned mean and variance. Training also pushes these distributions toward a simple prior, usually a standard normal, so the latent space stays smooth and new samples can be drawn from the prior and decoded. This probabilistic approach made it possible for VAEs to generate new, unique data points, marking a significant step forward in generative modeling and data synthesis.
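Two pieces distinguish a VAE's latent space from a plain autoencoder's: sampling through the reparameterization trick and a KL-divergence term that pulls each encoded distribution toward the standard normal prior. The NumPy sketch below shows only these two pieces; the mu and log_var arrays are made-up stand-ins for encoder outputs, and no decoder is included.

```python
import numpy as np

rng = np.random.default_rng(0)

def reparameterize(mu, log_var, rng):
    """Draw z ~ N(mu, exp(log_var)) as z = mu + sigma * eps with eps ~ N(0, I).
    In an autodiff framework, keeping the randomness in eps lets gradients
    flow to mu and log_var."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over latent dims."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=-1)

# Hypothetical encoder outputs for a batch of two inputs in a 3-D latent space.
mu = np.array([[0.0, 0.0, 0.0], [1.0, -0.5, 2.0]])
log_var = np.array([[0.0, 0.0, 0.0], [-1.0, 0.0, 0.5]])

z = reparameterize(mu, log_var, rng)   # one latent sample per input, shape (2, 3)
kl = kl_to_standard_normal(mu, log_var)
print("KL per input:", kl)             # the first input equals the prior, so its KL is 0

# Generation: sample latent vectors from the prior; a trained decoder (not shown)
# would map each row to a new data point.
z_new = rng.standard_normal((4, 3))
```

The full VAE loss adds this KL term to a reconstruction term, which is what keeps the latent space regular enough to sample from.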

Latent Space in Generative Adversarial Networks

The advent of Generative Adversarial Networks (GANs) turned latent space into a foundation for data generation. GANs employ a generator-discriminator structure: the generator maps random vectors sampled from the latent space (usually from a simple distribution such as a standard Gaussian) to realistic data points, while the discriminator learns to distinguish between real and generated samples. This adversarial setup allows GANs to generate highly realistic outputs, with latent space acting as a “creative” space from which new data can be sampled.
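The adversarial setup comes down to two loss functions computed from the discriminator's outputs. This NumPy sketch uses the standard binary cross-entropy formulation with the common "non-saturating" generator loss; the linear generator G and linear discriminator D are hypothetical toy stand-ins for real networks, and the "real" batch is synthetic.

```python
import numpy as np

def bce_with_logits(logits, targets):
    """Mean binary cross-entropy computed from raw logits in a numerically stable way."""
    return np.mean(np.maximum(logits, 0) - logits * targets + np.log1p(np.exp(-np.abs(logits))))

def discriminator_loss(real_logits, fake_logits):
    # The discriminator is rewarded for scoring real data as 1 and generated data as 0.
    return (bce_with_logits(real_logits, np.ones_like(real_logits))
            + bce_with_logits(fake_logits, np.zeros_like(fake_logits)))

def generator_loss(fake_logits):
    # Non-saturating generator loss: the generator wants D(G(z)) scored as real (1).
    return bce_with_logits(fake_logits, np.ones_like(fake_logits))

rng = np.random.default_rng(0)
latent_dim, data_dim, batch = 4, 2, 8

# Hypothetical toy networks: a linear generator and a linear discriminator.
G = rng.normal(size=(latent_dim, data_dim))
D = rng.normal(size=data_dim)

z = rng.standard_normal((batch, latent_dim))        # random vectors from the latent prior
fake = z @ G                                        # generator: latent space -> data space
real = rng.normal(loc=3.0, size=(batch, data_dim))  # stand-in for a batch of real data

print("D loss:", discriminator_loss(real @ D, fake @ D))
print("G loss:", generator_loss(fake @ D))
```

In actual training, the two networks take turns updating their parameters by gradient descent on their own losses; this sketch only evaluates the losses once.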

Latent space in GANs enables diverse applications, such as image synthesis, video generation, and style transfer. It also allows fine control over generated outputs: moving a latent vector a small distance, or along a suitable direction, can change specific features of the output, such as an object’s color, size, or shape. This control has driven GANs’ popularity in areas like virtual reality, entertainment, and art.
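Interpolating between two latent vectors is a common way to see this smoothness. The sketch below shows linear interpolation and spherical interpolation (slerp) in NumPy. The generate function is a hypothetical stand-in for a trained GAN generator, so its outputs are plain vectors rather than images. Finding a direction that controls one specific attribute, such as color, typically takes extra analysis of a trained model, which this sketch does not attempt.

```python
import numpy as np

def lerp(z_a, z_b, steps):
    """Evenly spaced points on the straight line from z_a to z_b, endpoints included."""
    t = np.linspace(0.0, 1.0, steps)[:, None]
    return (1 - t) * z_a + t * z_b

def slerp(z_a, z_b, steps):
    """Spherical interpolation along the arc between z_a and z_b. Often preferred for
    Gaussian latents, since intermediate points keep norms closer to typical prior samples.
    Assumes z_a and z_b are not parallel (sin(omega) would be 0)."""
    cos_omega = np.dot(z_a / np.linalg.norm(z_a), z_b / np.linalg.norm(z_b))
    omega = np.arccos(np.clip(cos_omega, -1.0, 1.0))
    t = np.linspace(0.0, 1.0, steps)[:, None]
    return (np.sin((1 - t) * omega) * z_a + np.sin(t * omega) * z_b) / np.sin(omega)

rng = np.random.default_rng(0)
latent_dim = 64

# Hypothetical stand-in for a trained generator: any smooth map from latent vectors to outputs.
W = rng.normal(size=(latent_dim, 16)) / np.sqrt(latent_dim)
def generate(z):
    return np.tanh(z @ W)

z_a, z_b = rng.standard_normal(latent_dim), rng.standard_normal(latent_dim)
frames = generate(slerp(z_a, z_b, steps=8))   # 8 outputs morphing from G(z_a) to G(z_b)

# A small step in latent space produces a small change in the output.
direction = rng.standard_normal(latent_dim)
direction /= np.linalg.norm(direction)
nudged = generate(z_a + 0.1 * direction)
```

With a real generator, rendering frames in order would show one image gradually morphing into another.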

Latent Space in Modern Deep Learning Architectures

Today, latent space is integral to a wide range of AI models beyond unsupervised learning, including Convolutional Neural Networks (CNNs) for image classification, Transformers in NLP, and recommendation systems in e-commerce. CNNs leverage latent space to capture high-level patterns in images, enabling tasks like object detection and classification. Transformers use attention mechanisms to create high-dimensional latent representations of text, which allows models to learn context and relationships between language elements effectively. Latent space has grown from a simple dimensionality reduction tool into a core component of many deep learning architectures, supporting efficient and effective data interpretation across disciplines.
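The attention mechanism mentioned above can be sketched in a few lines. This is single-head scaled dot-product self-attention in NumPy, assuming random token embeddings and random projection matrices in place of learned ones. Each output row is a context-dependent representation of one token that mixes in information from the others.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.
    x: (seq_len, d_model) token embeddings -> (seq_len, d_head) contextual vectors."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])   # how strongly each token attends to every other
    weights = softmax(scores, axis=-1)        # each row is a probability distribution
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_head = 5, 16, 8
x = rng.normal(size=(seq_len, d_model))   # hypothetical embeddings for a 5-token sentence
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) / np.sqrt(d_model) for _ in range(3))

out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape, weights.round(2))
```

Real Transformers stack many such layers, use several heads in parallel, and learn the projection matrices during training.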

Applications of Latent Space in Deep Learning

Latent space representations have become essential in many areas of deep learning, enabling models to handle complex data more efficiently and unlock capabilities in a variety of applications. Here are some key ways latent space is leveraged in modern machine learning:

1. Data Compression

One of the most practical uses of latent space is in data compression. By mapping high-dimensional input data into a lower-dimensional latent representation, models can capture the essential features of the data while significantly reducing its size. This compressed form is particularly useful in resource-constrained environments like mobile devices and IoT applications, where storage and processing power are limited. For example, autoencoders trained on images can reduce the storage requirements of high-resolution images while preserving key details, allowing efficient storage and retrieval in compressed formats.
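The storage saving is simple arithmetic on byte counts. This sketch assumes a 28 × 28 8-bit grayscale image and a hypothetical 32-dimensional latent code; the latent size and data type are illustrative choices. The compression is lossy, since the decoder reconstructs only an approximation of the original.

```python
import numpy as np

def compression_ratio(input_shape, input_dtype, latent_dim, latent_dtype):
    """Bytes needed for the raw input divided by bytes needed for its latent code.
    Ignores the decoder weights, which must also be stored once to reconstruct data."""
    input_bytes = np.prod(input_shape) * np.dtype(input_dtype).itemsize
    latent_bytes = latent_dim * np.dtype(latent_dtype).itemsize
    return input_bytes / latent_bytes

# A 28x28 8-bit grayscale image (784 bytes) vs. a hypothetical 32-D latent code.
print(compression_ratio((28, 28), np.uint8, 32, np.float32))   # 784 / 128 = 6.125
print(compression_ratio((28, 28), np.uint8, 32, np.float16))   # 784 / 64 = 12.25
```

Because the decoder is a fixed one-time cost, the saving pays off mainly when many items are stored or transmitted with the same model.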

2. Anomaly Detection

Latent space representations make it possible to detect anomalies by highlighting data points that deviate significantly from learned patterns. In cybersecurity, for instance, latent space can help identify unusual network activity or malicious transactions: typical behavior tends to map to tight clusters, while unusual activity lands far from them. Similarly, in manufacturing and quality control, latent space can be used to detect defective products by identifying patterns that don’t match standard configurations. By isolating outliers in latent space, anomaly detection systems can automate monitoring tasks, reduce the need for manual inspection, and enhance safety.
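One common autoencoder-based recipe flags an input as anomalous when it cannot be reconstructed well from its latent code. The sketch below uses PCA as a stand-in for a trained linear autoencoder and synthetic 10-D data lying near a 2-D subspace; the 99th-percentile threshold is an illustrative choice. It is a variant of the idea above that scores points by reconstruction error rather than by raw distance in latent space.

```python
import numpy as np

rng = np.random.default_rng(0)

def sample_normal(n):
    """Synthetic 'normal' behavior: 10-D points close to a fixed 2-D subspace."""
    return rng.normal(size=(n, 2)) @ basis + 0.05 * rng.normal(size=(n, 10))

basis = rng.normal(size=(2, 10))
train = sample_normal(500)

# Fit a linear encoder/decoder (PCA with 2 components) on normal data only.
mean = train.mean(axis=0)
_, _, vt = np.linalg.svd(train - mean, full_matrices=False)
components = vt[:2]

def reconstruction_error(x):
    z = (x - mean) @ components.T   # encode into the 2-D latent space
    x_hat = z @ components + mean   # decode back to 10-D
    return np.sum((x - x_hat) ** 2, axis=1)

# Threshold: the 99th percentile of errors seen on normal training data.
threshold = np.percentile(reconstruction_error(train), 99)

new_normal = sample_normal(5)
anomalies = rng.normal(size=(5, 10))  # points that ignore the learned structure
print("normal flagged:   ", reconstruction_error(new_normal) > threshold)
print("anomalies flagged:", reconstruction_error(anomalies) > threshold)
```

The threshold trades false alarms against missed anomalies; in practice it is tuned on held-out data.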

3. Data Generation

Latent space is crucial in generative modeling, where new data samples are created by sampling from the latent space. Models like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) leverage this capability to generate realistic images, videos, and sounds. This generative approach is widely applied in creative fields such as art, music, and game development, where artists and designers can use these models to produce unique content or variations. Latent space also supports synthetic data generation for training purposes, where artificial datasets are created to improve model performance in scenarios with limited real-world data.

4. Transfer Learning

Latent space representations enable transfer learning, where a model trained on one task reuses its learned representations for a different but related task. For instance, a model trained on object recognition might reuse its learned latent features (such as edges, shapes, and textures) for a new, more specific task like facial recognition. Because the model doesn’t need to learn from scratch, this typically shortens training and can improve accuracy, especially when the new task has little labeled data. Transfer learning is widely used in fields like natural language processing (NLP) and computer vision, where complex patterns learned from large datasets can benefit smaller, specialized tasks.
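The usual pattern is to freeze the pretrained feature extractor and train only a small new head on top of its latent features. In this NumPy sketch the "pretrained" extractor is a hypothetical fixed random map, used only to keep the example self-contained; in practice it would be a network trained on a large dataset. The new head is a logistic regression trained by gradient descent on a small synthetic labeled set.

```python
import numpy as np

rng = np.random.default_rng(0)
raw_dim, feat_dim, n = 20, 64, 200

# Hypothetical "pretrained" feature extractor, kept frozen (never updated below).
W_frozen = rng.normal(size=(raw_dim, feat_dim)) / np.sqrt(raw_dim)
def extract_features(x):
    return np.tanh(x @ W_frozen)

# Small labeled dataset for the new task (synthetic binary labels).
x = rng.normal(size=(n, raw_dim))
y = (x @ rng.normal(size=raw_dim) > 0).astype(float)

# Only the new head (logistic regression on the frozen features) is trained.
feats = extract_features(x)
w, b, lr = np.zeros(feat_dim), 0.0, 0.5
for _ in range(500):
    p = 1.0 / (1.0 + np.exp(-(feats @ w + b)))  # predicted probabilities
    grad = p - y                                 # per-example gradient of log-loss w.r.t. logits
    w -= lr * feats.T @ grad / n
    b -= lr * grad.mean()

train_acc = np.mean((feats @ w + b > 0) == (y == 1))
print(f"training accuracy of the new head: {train_acc:.2f}")
```

Frameworks express the same idea by marking the pretrained layers as non-trainable and fitting only the layers added for the new task.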

Latent space has thus become indispensable in a wide array of applications, from enhancing efficiency and generating new data to enabling more adaptable and scalable machine learning systems. These capabilities continue to expand as the understanding and utilization of latent space evolve, making it a fundamental aspect of modern AI.

Code Implementation

Here’s a hands-on coding example that demonstrates how to visualize and interact with latent space using an autoencoder trained on the MNIST dataset. This example, found in the ae.py file within the GitHub repository, provides a simple, clean codebase to help you understand how autoencoders compress high-dimensional data into a compact latent space.

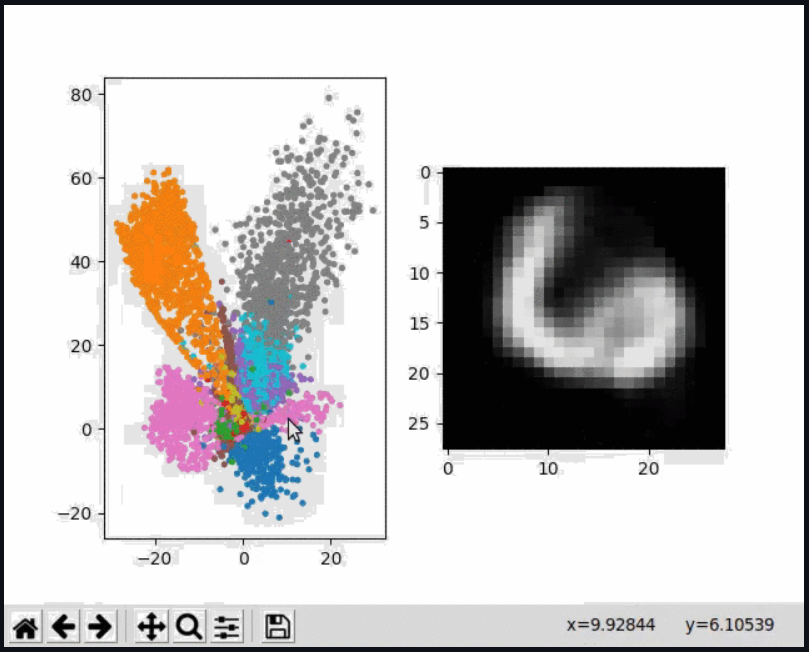

The autoencoder learns to represent 784-dimensional MNIST digit images (28 × 28 pixels) in a 2-dimensional latent space, producing a clustered view of the digits. The left side of the visualization shows this latent space, where each point is the encoded representation of one digit image. When you hover over a point, the decoder maps its coordinates back to the original 784-dimensional space, and the reconstructed digit appears on the right side of the screen. This demonstrates how latent space captures meaningful patterns in data and how it can be manipulated to generate new variations of the data points.

How to Use the Code

- Clone the repository from GitHub and navigate to the project directory.

- Install the necessary dependencies: TensorFlow, Matplotlib, NumPy, and Tkinter.

- Run the main file with:

  python ae.py

  This script will start the real-time visualization. If you experience any slowness, you can use the precomputed version instead:

  python ae_precomp.py

  The ae_precomp.py file precomputes reachable digit decodings rather than decoding them live during hover, which may offer faster interaction.
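The repository's ae_precomp.py is not shown here, so the following sketches only one plausible way such precomputation could work, not the repository's implementation. It decodes a regular grid of 2-D latent points once, in a single batched call, and then answers each hover event with a nearest-grid-point lookup. The toy_decode function is a hypothetical stand-in for the trained decoder.

```python
import numpy as np

def precompute_decodings(decode, x_range, y_range, resolution):
    """Decode every point of a regular grid over the 2-D latent space once, up front."""
    xs = np.linspace(*x_range, resolution)
    ys = np.linspace(*y_range, resolution)
    gx, gy = np.meshgrid(xs, ys)                  # gx[i, j] = xs[j], gy[i, j] = ys[i]
    grid = np.column_stack([gx.ravel(), gy.ravel()])
    images = decode(grid)                         # one batched decoder call
    return xs, ys, images.reshape(resolution, resolution, *images.shape[1:])

def lookup(xs, ys, table, x, y):
    """Return the precomputed decoding nearest to the hovered latent coordinate."""
    i = np.abs(ys - y).argmin()
    j = np.abs(xs - x).argmin()
    return table[i, j]

# Hypothetical stand-in for a trained decoder mapping (n, 2) latents to (n, 28, 28) images.
def toy_decode(z):
    return np.tile(z.sum(axis=1)[:, None, None], (1, 28, 28))

xs, ys, table = precompute_decodings(toy_decode, (-3, 3), (-3, 3), resolution=31)
image = lookup(xs, ys, table, x=0.41, y=-1.2)
```

The trade-off is memory and startup time for instant hover responses, and the shown digit changes in steps of the grid spacing rather than continuously.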

With these tools, you can explore the power of latent space, observe how autoencoders learn data features, and see how different regions of the latent space correspond to different digit types.

Conclusion

Latent space is a foundational concept in deep learning that has reshaped how models process, compress, and generate data. By transforming high-dimensional input into a simplified, lower-dimensional representation, latent space enables neural networks to capture essential features and relationships that drive efficient and effective data analysis. Whether used for anomaly detection, data compression, or creative generation of new content, latent space empowers models to perform complex tasks with improved accuracy and flexibility.

From its origins in early dimensionality reduction techniques to its role in modern neural network architectures like autoencoders, GANs, and transformers, latent space has evolved into a critical component of machine learning. Its versatility in representation learning, generative modeling, and transfer learning underscores its importance across various AI fields, including image synthesis, natural language processing, and recommendation systems.

As deep learning continues to advance, the applications of latent space will likely expand even further, offering new ways to interpret, analyze, and manipulate data. Understanding and leveraging latent space remains central to building sophisticated AI models that can tackle the complexities of real-world data, ultimately pushing the boundaries of what artificial intelligence can achieve.

FAQs

What is the purpose of latent space in deep learning?

Latent space serves as a way for deep learning models to compress high-dimensional data into lower-dimensional representations. This helps models capture essential features, making it easier to identify patterns, perform classifications, generate images, or interpret language. By reducing the complexity of data, latent space enhances the model’s ability to learn and generalize across different tasks.

How does latent space help with image generation?

In image generation, latent space provides a lower-dimensional “blueprint” of features that can be used to create new, realistic images. Generative Adversarial Networks (GANs) and variational autoencoders (VAEs), for instance, learn to map points in latent space to images, so the model can generate new variations by sampling points in the latent space and decoding them. This technique is commonly used to create synthetic images and styles in applications like art generation and virtual environments.

What are common methods to create latent spaces in deep learning?

Latent spaces are often created through models like autoencoders and variational autoencoders (VAEs), which compress input data into lower-dimensional representations and learn to reconstruct the data from them. Techniques like Principal Component Analysis (PCA) are also used for simpler, linear dimensionality reduction, while GANs learn a generator that maps latent vectors, sampled from a simple prior distribution, to synthetic images.