Machine learning has transformed how we approach problem-solving in various fields, from healthcare to finance to everyday applications. For developers, a crucial part of building effective machine learning solutions lies in choosing the right libraries and tools. Fortunately, numerous powerful machine learning libraries have emerged, making it easier to implement complex algorithms and manage workflows without building everything from scratch.

Python has become the go-to language for machine learning and AI due to its simplicity, readability, and an extensive ecosystem of libraries that support scientific computing and data manipulation. Its flexibility, along with a strong community, means that Python libraries are continually optimized and widely adopted, allowing developers to quickly turn ideas into impactful machine learning solutions.

In this article, we’ll go over ten of the best Python libraries for machine learning, complete with examples to showcase how they work: Keras, LightGBM, Matplotlib, NumPy, Pandas, PyTorch, SciPy, Scikit-learn, TensorFlow, and XGBoost.

Whether you’re working on a classification problem, regression, or natural language processing, these libraries offer a range of functionality to help you create accurate and efficient models.

Keras

Keras is a high-level neural network API designed for efficient and accessible development of machine learning and deep learning models. Open-source and built in Python, Keras is known for its intuitive, user-friendly interface, making it suitable for both beginners and professionals. Its modular, flexible architecture allows developers to stack layers, such as convolutional and recurrent layers, to create complex neural network architectures tailored to various applications.

Widely used in fields like image and speech recognition, natural language processing, and recommendation systems, Keras facilitates rapid prototyping and deployment, streamlining workflows by abstracting low-level details. Keras 3 runs on multiple backend engines, including TensorFlow, JAX, and PyTorch (older releases also supported Theano), and it relies on the chosen backend for GPU acceleration, enabling fast training on large datasets. This scalability and versatility make Keras a valuable tool in both research and industry.

Since Keras is included in the TensorFlow package, you can get Keras with the following command:

pip install tensorflow

To import Keras, use:

from tensorflow import keras

Once imported, you can access all Keras models and classes:

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Flatten, Conv2D

from tensorflow.keras.optimizers import Adam

from tensorflow.keras.datasets import mnist

from tensorflow.keras.utils import to_categorical

Benefits

- User-friendly for easy neural network development.

- Supports CNNs and RNNs out of the box.

- Built-in tools for NLP, vision, and generative AI.

- Compatible across mobile, server, and embedded devices.

- Enables fast experimentation with error tracking.

- Flexible resource use on both CPUs and GPUs.
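The layer stacking described in this section can be sketched as follows. This is a minimal illustration rather than a recommended architecture: the 28x28 grayscale input shape, the layer sizes, and the 10 output classes are all assumptions chosen for the example.

```python
from tensorflow.keras import Input
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, Dense, Flatten
from tensorflow.keras.optimizers import Adam

# Stack layers in order: input -> convolution -> flatten -> classifier.
model = Sequential([
    Input(shape=(28, 28, 1)),                      # assumed 28x28 grayscale images
    Conv2D(32, kernel_size=3, activation="relu"),  # 32 filters of size 3x3
    Flatten(),
    Dense(10, activation="softmax"),               # assumed 10 output classes
])

# Attach an optimizer, a loss function, and a metric to the model.
model.compile(
    optimizer=Adam(learning_rate=0.001),
    loss="categorical_crossentropy",
    metrics=["accuracy"],
)

# Print the layers, their output shapes, and the parameter counts.
model.summary()
```

Swapping or adding layers only requires editing the list passed to Sequential, which is what makes quick experiments practical.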

LightGBM

LightGBM is an efficient, high-performance gradient boosting library built on decision trees and designed for large datasets. It is known for its speed and accuracy on structured data tasks like classification, regression, and ranking. Its histogram-based algorithm is a key innovation: by discretizing continuous features into bins, it accelerates training and reduces memory use.

One of the library’s strengths is its ability to handle large datasets with low memory consumption. It grows trees leaf-wise, which often improves accuracy over level-wise growth but can overfit small datasets unless parameters such as num_leaves and max_depth are constrained. LightGBM also supports parallel training on CPUs as well as GPU acceleration, making it efficient for computationally intensive workloads. Its performance and scalability make it a top choice for real-time prediction tasks and large-scale data analytics, where quick, accurate model training is essential. It is widely applied in areas like fraud detection, customer churn prediction, recommendation systems, and financial modeling.
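To make the idea of discretizing a feature into bins more concrete, here is a rough NumPy-only sketch. It is not LightGBM's actual binning code: the quantile-based edges, the bin count of 16, and the synthetic data are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
feature = rng.normal(size=1_000)   # one continuous feature (synthetic data)
n_bins = 16                        # illustrative; LightGBM's max_bin defaults to 255

# Bin edges at evenly spaced quantiles (an assumed, simplified strategy).
edges = np.quantile(feature, np.linspace(0.0, 1.0, n_bins + 1))

# Map each value to a bin index in [0, n_bins - 1] using the interior edges.
bin_ids = np.digitize(feature, edges[1:-1])

# A split search can now consider at most n_bins - 1 thresholds
# instead of every distinct feature value.
counts = np.bincount(bin_ids, minlength=n_bins)
print(counts)
```

Storing small integer bin indices instead of floating-point values is also where the memory savings come from.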

Since LightGBM is available as a standalone package, you can install it with the following command:

pip install lightgbm

To import LightGBM, use:

import lightgbm as lgb

Once imported, you can access LightGBM’s primary functions and classes:

from lightgbm import LGBMClassifier, LGBMRegressor, Dataset, train

Benefits

- Faster training speed and higher efficiency.

- Lower memory usage.

- Better accuracy, with overfitting kept in check through parameters such as num_leaves and max_depth.

- Support for parallel, distributed, and GPU learning.

- Effective with large-scale data.

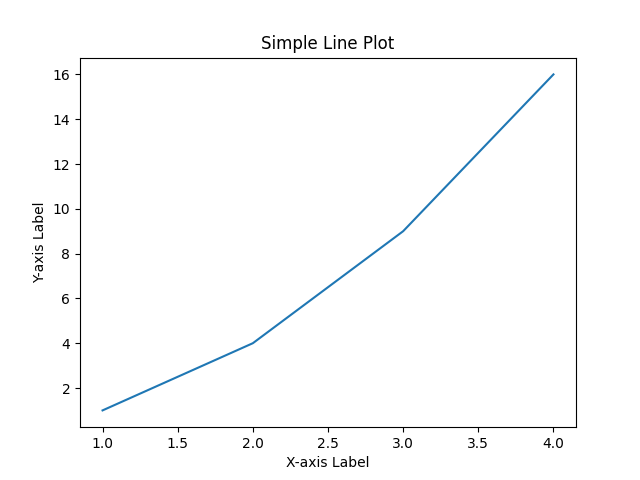

Matplotlib

Matplotlib is a powerful data visualization library for Python that enables the creation of a wide variety of static, animated, and interactive plots. It has become a core tool for data visualization, maintained by an extensive community of developers. Known for its versatility, Matplotlib allows users to produce high-quality figures in multiple formats, making it suitable for everything from quick exploratory plots to detailed, publication-ready visuals. Users can create and adjust figures with fine-grained control over all elements—such as subplots, colors, line styles, and annotations.

Matplotlib seamlessly integrates with other libraries like NumPy, Pandas, and Seaborn, streamlining the data workflow and enabling comprehensive visualization capabilities. In machine learning, Matplotlib is widely used for tasks such as visualizing data distributions, evaluating model performance through plots like ROC curves and confusion matrices, and tracking metrics during training. Its extensive customization and platform independence make it accessible to both beginners and advanced users across diverse applications.

Since Matplotlib is available as a standalone package, you can install it with the following command:

pip install matplotlib

To import Matplotlib, use:

import matplotlib.pyplot as plt

Once imported, you can access Matplotlib’s primary functions and classes:

# Example of a simple line plot

plt.plot([1, 2, 3, 4], [1, 4, 9, 16])

plt.xlabel('X-axis Label')

plt.ylabel('Y-axis Label')

plt.title('Simple Line Plot')

plt.show()

This provides you with essential tools to create a range of visualizations, from simple line graphs to more complex figures, with precise control over plot aesthetics and layout.
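For the machine learning use case of tracking metrics during training, a sketch might look like the following. The loss values are made up purely for illustration, and the output file name loss_curves.png is an arbitrary choice.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the script runs without a display
import matplotlib.pyplot as plt

# Hypothetical per-epoch losses, invented for illustration only.
epochs = [1, 2, 3, 4, 5]
train_loss = [0.90, 0.62, 0.45, 0.36, 0.30]
val_loss = [0.95, 0.70, 0.58, 0.55, 0.56]

# The object-oriented API gives explicit control over the figure and axes.
fig, ax = plt.subplots(figsize=(6, 4))
ax.plot(epochs, train_loss, marker="o", label="Training loss")
ax.plot(epochs, val_loss, marker="s", linestyle="--", label="Validation loss")
ax.set_xlabel("Epoch")
ax.set_ylabel("Loss")
ax.set_title("Training vs. Validation Loss")
ax.legend()

# Save to a file instead of opening a window.
fig.savefig("loss_curves.png", dpi=150)
```

A validation curve that flattens or rises while the training curve keeps falling, as in this invented data, is the usual visual cue for overfitting.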

Benefits

- Generates high-quality, customizable charts.

- Cross-platform compatibility across Windows, macOS, and Linux.

- Interactive features, enabling dynamic exploration of data.

- Efficient handling of large datasets.

- Flexible data representation adaptable to diverse visualization needs.

- User-friendly, accessible for beginners and experts.

- Free and open source.

NumPy

NumPy is a fundamental library for numerical computing in Python, offering efficient tools for handling and processing large, multi-dimensional arrays and matrices. It is a go-to for scientific computing tasks, powering many data science and machine learning libraries with its optimized array operations for mathematical computations and data manipulation.

One of NumPy’s core strengths is its ability to perform mathematical and logical operations on entire arrays without explicit loops, greatly speeding up computations. It integrates seamlessly with other libraries like SciPy, Pandas, and Matplotlib, supporting a robust ecosystem for scientific and analytical tasks. It is widely used for data preprocessing, feature extraction, handling large datasets, and implementing algorithms in a vectorized way.

Since NumPy is available as a standalone package, you can install it with the following command:

pip install numpy

To import NumPy, use:

import numpy as np

Once imported, you can access NumPy’s primary functions and classes:

# Create an array with a range of numbers

array = np.arange(10)

# Reshape an array into a matrix

matrix = array.reshape(2, 5)

# Generate an array of zeros

zeros = np.zeros((3, 3))

# Generate an array of random numbers

random_numbers = np.random.rand(4, 4)

# Calculate the mean of an array

mean_value = np.mean(array)

# Find the maximum value in an array

max_value = np.max(array)

# Compute the dot product of two matrices

dot_product = np.dot(matrix, matrix.T)

# Get unique elements in an array

unique_elements = np.unique(array)

This provides you with essential tools for efficient numerical operations and array management, making NumPy a cornerstone of scientific computing and data analysis in Python.
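The loop-free array operations mentioned earlier rely on broadcasting, which can be sketched with a small feature-standardization example. The array values here are arbitrary and chosen only to keep the arithmetic easy to follow.

```python
import numpy as np

# Three samples (rows) with two features (columns).
X = np.array([[1.0, 10.0],
              [2.0, 20.0],
              [3.0, 30.0]])

col_mean = X.mean(axis=0)   # per-column mean, shape (2,)
col_std = X.std(axis=0)     # per-column standard deviation, shape (2,)

# Broadcasting stretches the (2,) vectors across all 3 rows; no loop needed.
X_standardized = (X - col_mean) / col_std

print(X_standardized.mean(axis=0))  # approximately [0. 0.]
print(X_standardized.std(axis=0))   # approximately [1. 1.]
```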

Benefits

- Fast numerical array operations.

- Memory-efficient storage.

- Broadcasting for flexible array shapes.

- Optimized element-wise functions.

- Smooth integration with SciPy, Matplotlib, Pandas.

Pandas

Pandas is an essential Python library for data manipulation and analysis, offering powerful tools to handle structured data in the form of tables. Its core structures, DataFrames and Series, allow for easy data loading, manipulation, and analysis, making it a go-to library in machine learning and general data processing tasks. Built on top of NumPy, Pandas ensures fast, efficient operations and integrates seamlessly with libraries like Matplotlib for data visualization.

Pandas provides flexible functionality to read from and write to various data sources, such as CSV, Excel, and SQL databases, supporting a broad range of data workflows. In machine learning, it is commonly used for data cleaning, feature engineering, and exploratory data analysis, making it essential for preparing data before model training. Its ability to handle missing values, perform aggregations, and create pivot tables makes it an indispensable tool for both simple and complex data analyses.

To install Pandas, use the following command:

pip install pandas

To import Pandas, use:

import pandas as pd

Once imported, you can create and manipulate DataFrames easily:

# Load dataset from CSV file

df = pd.read_csv('data.csv')

# Preview the first and last rows of the DataFrame

print(df.head()) # First 5 rows

print(df.tail()) # Last 5 rows

# Generate summary statistics for numerical columns

print(df.describe())

# Check for missing values in each column

print(df.isnull().sum())

# Fill missing values in a column with the column mean

df['column_name'] = df['column_name'].fillna(df['column_name'].mean())

# Convert categorical variables into dummy/indicator variables

df = pd.get_dummies(df, columns=['categorical_column'])
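The aggregations and pivot tables mentioned above can be tried without a CSV file. The sketch below uses a small in-memory DataFrame whose column names and values are invented for illustration.

```python
import pandas as pd

# Small in-memory table; the column names and values are illustrative.
df = pd.DataFrame({
    "region": ["north", "north", "south", "south", "south"],
    "product": ["a", "b", "a", "a", "b"],
    "sales": [100, 150, 80, 120, 60],
})

# Total sales per region.
sales_by_region = df.groupby("region")["sales"].sum()
print(sales_by_region)

# Regions as rows, products as columns, summed sales as values.
pivot = df.pivot_table(index="region", columns="product", values="sales", aggfunc="sum")
print(pivot)
```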

Benefits

- Intuitive syntax for data manipulation.

- Robust data cleaning and transformation tools.

- Flexible data selection and filtering.

- Efficient aggregation functions, like groupby and pivot.

- Integrated visualization with Matplotlib.

PyTorch

PyTorch is an open-source deep learning library originally developed by Facebook’s AI Research lab (now part of Meta AI), widely used for its flexibility and ease of debugging. Its tight integration with Python and NumPy also makes it easy to apply traditional scientific computing techniques within models, which has made it particularly popular among machine learning researchers and developers.

Unlike some frameworks that rely on static graphs, PyTorch uses dynamic computation graphs, allowing developers to adjust the graph on the fly. This makes it highly adaptable for complex architectures like recurrent neural networks (RNNs) and natural language processing (NLP) models, where flexibility is essential. Overall, it provides a range of modules for creating and training neural networks, making it suitable for both beginners and advanced users.

To install PyTorch, use the following command:

pip install torch

To import PyTorch, use:

import torch

Here is an example of a simple neural network using PyTorch:

import torch

import torch.nn as nn

import torch.optim as optim

# Define a simple neural network

class SimpleNN(nn.Module):
    def __init__(self):
        super(SimpleNN, self).__init__()
        self.fc1 = nn.Linear(784, 128)
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = torch.relu(self.fc1(x))
        x = self.fc2(x)
        return x

# Initialize model, loss function, and optimizer

model = SimpleNN()

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(model.parameters(), lr=0.001)

# Dummy input and output tensors

inputs = torch.randn(64, 784) # Batch of 64, 784 features (28x28 image flattened)

labels = torch.randint(0, 10, (64,)) # Random target labels

# Training step

optimizer.zero_grad() # Clear gradients

outputs = model(inputs) # Forward pass

loss = criterion(outputs, labels) # Calculate loss

loss.backward() # Backward pass

optimizer.step() # Update weights

This example demonstrates a basic neural network with an input layer, one hidden layer, and an output layer, followed by a single training step. PyTorch’s straightforward API makes it a powerful tool for rapid experimentation and model building, for beginners and advanced users alike.
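The dynamic computation graphs described earlier in this section can be illustrated with ordinary Python control flow. In this sketch the function name repeated_double and the threshold of 10 are hypothetical choices; the point is that the number of recorded operations depends on the input data.

```python
import torch

def repeated_double(x, max_steps=10):
    """Double x until its norm reaches 10; the step count depends on the data."""
    steps = 0
    while x.norm() < 10 and steps < max_steps:
        x = x * 2
        steps += 1
    return x.sum(), steps

x = torch.tensor([1.0, 2.0], requires_grad=True)
out, steps = repeated_double(x)

# Autograd recorded exactly the operations that ran during this call.
out.backward()
print("steps:", steps)        # 3 doublings for this input
print("gradient:", x.grad)    # each element was scaled by 2**3 = 8
```

A different input would take a different number of loop iterations, and the graph used for the backward pass would change accordingly.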

Benefits

- Easy to learn with strong documentation.

- Boosts developer productivity with Python integration.

- Simple debugging with dynamic computational graphs.

- Supports data parallelism for multi-CPU/GPU tasks.

- Rich ecosystem with tools for CV, NLP, and reinforcement learning.

SciPy

SciPy is an open-source Python library built on top of NumPy, designed for scientific and technical computing. With an extensive range of functions for mathematical operations, linear algebra, optimization, signal processing, and statistical analysis, SciPy is essential for researchers and developers working in data science and machine learning. It also integrates seamlessly with other libraries like Pandas, Matplotlib, and Scikit-Learn.

SciPy builds upon NumPy’s array operations by adding more specialized modules, such as scipy.stats for statistical functions, scipy.optimize for optimization algorithms, and scipy.integrate for solving integrals and differential equations. This makes it versatile and valuable for scientific research, prototyping, and machine learning tasks that require robust mathematical computations. In machine learning, SciPy is often used for tasks such as feature scaling, data transformations, clustering, and statistical analysis.

To install SciPy, use the following command:

pip install scipy

To import SciPy, use:

import scipy

from scipy import stats, optimize, integrate

Here are some useful SciPy functions often used in machine learning:

import numpy as np

from scipy import stats, optimize, integrate

# 1. Statistical functions - Z-score normalization

data = np.array([1, 2, 3, 4, 5])

z_scores = stats.zscore(data)

print("Z-scores:", z_scores)

# 2. Optimization - Finding the minimum of a function

def f(x):
    return x**2 + 5*np.sin(x)

result = optimize.minimize(f, x0=0)

print("Function minimum:", result.x)

# 3. Integration - Calculating the integral of a function

result, error = integrate.quad(lambda x: x**2, 0, 4)

print("Integral result:", result)

This example demonstrates some of SciPy’s core capabilities in statistics, optimization, and integration. With SciPy, developers can leverage these powerful mathematical tools in a Pythonic way, making it an ideal library for complex data analysis and machine learning tasks.
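Since scipy.integrate also handles differential equations, here is a brief sketch using solve_ivp. The decay equation dy/dt = -2y, the time span, and the tolerances are assumptions picked because the exact solution is known and easy to compare against.

```python
import numpy as np
from scipy import integrate

def decay(t, y):
    """Right-hand side of dy/dt = -2y."""
    return -2.0 * y

# Times at which the solution should be reported.
t_eval = np.linspace(0.0, 2.0, 5)

solution = integrate.solve_ivp(
    decay,
    t_span=(0.0, 2.0),
    y0=[1.0],
    t_eval=t_eval,
    rtol=1e-8,
    atol=1e-10,
)

# Compare against the closed-form solution y(t) = exp(-2t).
exact = np.exp(-2.0 * t_eval)
max_error = np.max(np.abs(solution.y[0] - exact))
print("Max abs error:", max_error)
```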

Benefits

- Built on NumPy, ensuring efficient data processing.

- High-performance computing with C, C++, and Fortran integration.

- Extensive collection of sub-packages for scientific tasks.

- Easy to understand and use for scientific problems.

- Open-source with support for parallel programming.

Scikit-learn

Scikit-learn is a widely used open-source machine learning library in Python that provides simple and efficient tools for data mining and data analysis. Built on top of NumPy, SciPy, and Matplotlib, it is designed to be accessible to both beginners and experienced practitioners, offering a broad selection of supervised and unsupervised learning algorithms.

Scikit-learn covers essential machine learning tasks, including classification, regression, clustering, and dimensionality reduction. Its user-friendly API enables quick implementation of these algorithms, making it ideal for prototyping and model experimentation. It also includes tools for model selection, cross-validation, and feature engineering, which are essential for developing robust machine learning workflows. It is frequently used for tasks such as data preprocessing, model training and evaluation, and hyperparameter tuning, often in conjunction with other libraries like Pandas and Matplotlib for data processing and visualization.

To install Scikit-learn, use the following command:

pip install scikit-learn

To import Scikit-learn, use:

from sklearn import datasets, model_selection, preprocessing, metrics

from sklearn.linear_model import LogisticRegression

from sklearn.ensemble import RandomForestClassifier

Here is an example of a basic machine learning pipeline using Scikit-learn:

from sklearn import datasets, model_selection, preprocessing, metrics

from sklearn.linear_model import LogisticRegression

from sklearn.ensemble import RandomForestClassifier

# Load a sample dataset

data = datasets.load_iris()

X, y = data.data, data.target

# Split the data into training and testing sets

X_train, X_test, y_train, y_test = model_selection.train_test_split(X, y, test_size=0.2, random_state=42)

# Data preprocessing - Standardize features

scaler = preprocessing.StandardScaler()

X_train = scaler.fit_transform(X_train)

X_test = scaler.transform(X_test)

# Initialize a classifier (e.g., Random Forest)

model = RandomForestClassifier(random_state=42)

model.fit(X_train, y_train) # Train the model

# Make predictions and evaluate the model

y_pred = model.predict(X_test)

accuracy = metrics.accuracy_score(y_test, y_pred)

print("Accuracy:", accuracy)

# Example with Logistic Regression

model = LogisticRegression()

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

accuracy = metrics.accuracy_score(y_test, y_pred)

print("Logistic Regression Accuracy:", accuracy)

This example demonstrates a typical machine learning workflow in Scikit-learn, including loading data, preprocessing, model training, and evaluation.
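Cross-validation and hyperparameter tuning, mentioned earlier in this section, can be combined in a few lines. The following is one possible sketch: the candidate values for C and the 5-fold setting are illustrative assumptions, not tuned choices.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Chain scaling and the classifier so the scaler is refit inside each fold.
pipeline = Pipeline([
    ("scaler", StandardScaler()),
    ("clf", LogisticRegression(max_iter=1000)),
])

# Try several regularization strengths with 5-fold cross-validation.
param_grid = {"clf__C": [0.1, 1.0, 10.0]}
search = GridSearchCV(pipeline, param_grid, cv=5, scoring="accuracy")
search.fit(X, y)

print("Best C:", search.best_params_["clf__C"])
print("Best cross-validated accuracy:", search.best_score_)
```

Putting the scaler inside the pipeline keeps statistics from the validation folds out of the preprocessing step, which avoids a subtle form of data leakage.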

Benefits

- Free and open-source with minimal licensing restrictions.

- Easy to use with a user-friendly API.

- Backed by a large community of contributors and extensive documentation.

- Includes a broad selection of supervised and unsupervised learning algorithms.

- Built-in tools for feature extraction, dimensionality reduction, and cross-validation.

TensorFlow

TensorFlow, developed by Google Brain, is an open-source deep learning framework known for its flexibility, scalability, and suitability for both research and production applications. It provides a comprehensive ecosystem for end-to-end machine learning workflows, covering tasks from data preprocessing and model development to deployment and monitoring.

TensorFlow’s range of APIs offers something for all levels of expertise: high-level libraries like Keras make model building intuitive, while low-level operations enable fine-grained control for advanced computation. This adaptability allows TensorFlow to excel in diverse applications, including image recognition, natural language processing, and reinforcement learning. Supporting distributed training and deployment across a range of platforms, from mobile devices to cloud infrastructure, TensorFlow is highly adaptable for scalable and complex machine learning projects.

To install TensorFlow, use the following command:

pip install tensorflow

To import TensorFlow, use:

import tensorflow as tf

Here’s an example of creating a simple neural network with TensorFlow:

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Flatten

from tensorflow.keras.optimizers import Adam

from tensorflow.keras.datasets import mnist

from tensorflow.keras.utils import to_categorical

# Load and preprocess the MNIST dataset

(X_train, y_train), (X_test, y_test) = mnist.load_data()

X_train, X_test = X_train / 255.0, X_test / 255.0 # Normalize pixel values

y_train, y_test = to_categorical(y_train), to_categorical(y_test) # One-hot encode labels

# Build a simple neural network model

model = Sequential([
    Flatten(input_shape=(28, 28)),
    Dense(128, activation='relu'),
    Dense(64, activation='relu'),
    Dense(10, activation='softmax')
])

# Compile the model with an optimizer and loss function

model.compile(optimizer=Adam(), loss='categorical_crossentropy', metrics=['accuracy'])

# Train the model

model.fit(X_train, y_train, epochs=5, batch_size=32, validation_split=0.2)

# Evaluate the model

test_loss, test_accuracy = model.evaluate(X_test, y_test)

print("Test Accuracy:", test_accuracy)

In this example, TensorFlow is used to load the MNIST dataset, preprocess it, build a neural network model, and evaluate its performance. TensorFlow’s extensive tools and ease of use, combined with its scalability, make it a powerful choice for developing and deploying machine learning models across various domains and devices.
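For the low-level control mentioned earlier in this section, tf.GradientTape lets you write the training loop yourself. The sketch below fits a single weight to the toy relationship y = 3x; the data, learning rate, and step count are assumptions chosen so the result is easy to verify.

```python
import tensorflow as tf

# Toy data following y = 3x; the goal is to recover the factor 3.
x = tf.constant([1.0, 2.0, 3.0, 4.0])
y = 3.0 * x

w = tf.Variable(0.0)      # the single trainable weight
learning_rate = 0.05

for step in range(50):
    # Record the forward pass so gradients can be computed afterwards.
    with tf.GradientTape() as tape:
        loss = tf.reduce_mean(tf.square(w * x - y))
    grad = tape.gradient(loss, w)
    # Plain gradient descent update: w <- w - lr * grad.
    w.assign_sub(learning_rate * grad)

print("Learned w:", float(w.numpy()))
```

High-level calls such as model.fit wrap a loop of this kind, so writing it by hand is mostly useful when a custom update rule is needed.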

Benefits

- Scalable across multiple devices, from mobile to complex systems.

- Free and open-source, available for anyone to use.

- Superior data visualization with its graph-based architecture.

- Debugging made easy with TensorBoard.

- Supports parallelism using GPU and CPU systems.

- Compatible with several programming languages, including Python, C++, and JavaScript.

- Supports TPUs, which can accelerate suitable workloads beyond what CPUs and GPUs offer.

XGBoost

XGBoost, a powerful tool for structured or tabular data, is built on the gradient boosting framework and stands out for its efficiency, scalability, and accuracy. Known for consistently high performance in data science competitions and real-world applications, it has become a go-to choice for machine learning tasks.

XGBoost integrates seamlessly with multiple programming languages, including Python, R, and Java, and is optimized for large datasets. It is widely used for machine learning tasks such as classification, regression, and ranking, offering features like regularization to prevent overfitting, parallelization for faster processing, automatic handling of missing and sparse data, and robust support for distributed computing. These features provide both speed and predictive power, making XGBoost ideal for use cases from financial modeling to recommendation systems and beyond.

To install XGBoost, use the following command:

pip install xgboost

To import XGBoost, use:

import xgboost as xgb

Here’s an example of a classification task using XGBoost with the Iris dataset:

from xgboost import XGBClassifier

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

# Load the Iris dataset

data = load_iris()

X, y = data.data, data.target

# Split the data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Initialize the XGBoost classifier

model = XGBClassifier(eval_metric='mlogloss')  # use_label_encoder is deprecated and no longer needed

# Train the model

model.fit(X_train, y_train)

# Make predictions and evaluate the model

y_pred = model.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

print("XGBoost Classifier Accuracy:", accuracy)

This example demonstrates a basic classification workflow in XGBoost, from loading data and training the model to evaluating its accuracy. With its powerful implementation of gradient boosting, XGBoost is highly effective in producing state-of-the-art results in machine learning competitions and industry applications, especially when dealing with structured data.

Benefits

- High performance on structured data.

- Scalable for large datasets, ensuring efficiency in training.

- Highly customizable with a wide range of hyperparameters.

- Built-in support for handling missing values in real-world data.

- Provides feature importance for better interpretability of models.

Conclusion

Choosing the right machine learning library can significantly impact the success and efficiency of your project. In this article, we reviewed some of the best libraries available today, each with its own strengths and specialized capabilities. From Scikit-learn for traditional ML algorithms to TensorFlow and PyTorch for deep learning, and specialized tools like XGBoost and LightGBM for gradient boosting, these libraries offer essential resources to bring your machine learning projects to life. By experimenting with these libraries, you’ll not only enhance your technical skills but also open the door to a world of possibilities for building powerful, intelligent applications.

FAQs

What is a machine learning library?

A machine learning library is a collection of pre-written code and functions that provides developers with tools for creating, training, and deploying machine learning models. These libraries handle a range of tasks, from data processing and feature extraction to model building, evaluation, and optimization. Machine learning libraries help streamline workflows, allowing developers to focus on improving model performance rather than implementing algorithms from scratch.

Which library is used in ML?

Popular libraries include Scikit-learn for traditional machine learning algorithms, TensorFlow and PyTorch for deep learning, and XGBoost and LightGBM for gradient boosting. Each library offers specialized features; for example, TensorFlow and PyTorch are widely used for neural network-based models, while Scikit-learn is known for its simplicity and comprehensive set of algorithms for standard machine learning tasks.

What are the 4 types of machine learning?

1. Supervised Learning – The model is trained on labeled data, learning to map inputs to specific outputs.

2. Unsupervised Learning – The model works with unlabeled data, aiming to find patterns or groupings within the data.

3. Semi-Supervised Learning – A combination of labeled and unlabeled data is used for training, allowing the model to leverage both supervised and unsupervised methods.

4. Reinforcement Learning – The model learns by interacting with an environment, receiving feedback in the form of rewards or penalties to optimize a sequence of decisions.
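The difference between the first two types can be seen on a single dataset. In this sketch, which assumes the Iris dataset and three clusters purely for illustration, the classifier is given the labels while the clustering model sees only the features.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)

# Supervised: the labels y guide training.
classifier = LogisticRegression(max_iter=1000).fit(X, y)
print("Training accuracy:", classifier.score(X, y))

# Unsupervised: only X is used; KMeans looks for 3 groups on its own.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)
print("Cluster sizes:", sorted(int(n) for n in np.bincount(kmeans.labels_)))
```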

What are the libraries in Python for machine learning?

- Scikit-learn – Ideal for basic ML algorithms (e.g., linear regression, clustering).

- TensorFlow – Popular for deep learning and neural networks.

- PyTorch – Another top choice for deep learning, favored for its dynamic computation graph.

- Keras – High-level API often used with TensorFlow for building neural networks.

- XGBoost and LightGBM – Specialized in gradient boosting for structured data.

- NLTK and spaCy – Focused on natural language processing tasks.

- Pandas – Essential for data preprocessing and manipulation, though not specifically a machine learning library.