
Reinforcement Learning from Human Feedback (RLHF) is a machine learning technique that incorporates human feedback to improve the behavior and performance of AI models. It combines reinforcement learning, where an agent learns through trial and error by receiving rewards or punishments, with human evaluation, so that the AI’s actions become better aligned with human preferences.

RLHF is especially well suited to tasks where the goals are complex, difficult to define, or subjective, such as judging the aesthetic appeal of an image or the creativity of a piece of writing. While defining concepts like “beauty” or “creativity” mathematically might be impractical, humans can evaluate them easily. RLHF therefore turns human feedback into a reward signal that guides the AI’s behavior, helping it produce outputs that are not only technically accurate but also aligned with human preferences.

What is Reinforcement Learning?

Before moving on to RLHF, it helps to review the basics of reinforcement learning (RL).

Reinforcement learning is a branch of machine learning where an agent learns to make decisions by interacting with an environment. It is based on the concept of trial and error: the agent takes actions in the environment, receives feedback in the form of rewards or penalties, and adjusts its future actions to maximize cumulative rewards over time.

Whew, that’s a lot of technical jargon. Let’s break down the concept using the example of a classic game – Super Mario.

Agent

Definition: The AI model or system trying to learn.

In Super Mario, the agent is the AI system controlling Mario. Its objective is to navigate through various levels, overcome obstacles, defeat enemies, and ultimately reach the flagpole at the end of each level.

Environment

Definition: The scenario or world the agent interacts with.

The environment consists of the entire Super Mario game world, including platforms, power-ups, enemies, and hazards. This dynamic environment is filled with challenges and opportunities that the agent must navigate to succeed.

Actions

Definition: The moves or decisions the agent makes in the environment.

The actions in Super Mario include jumping, running, crouching, and using power-ups. Each action affects Mario’s position and interactions within the environment, such as jumping on enemies to defeat them or collecting coins.

Rewards

Definition: Positive or negative feedback the agent receives after taking an action.

Rewards in Super Mario are given for various achievements. For example, collecting coins or power-ups provides positive rewards, while losing a life or falling into a pit results in negative feedback. Reaching the end of a level or defeating a boss grants significant rewards.

Goal

Definition: Maximize the total reward over time by learning the best actions.

The agent’s goal is to maximize its total reward by learning which actions yield the best outcomes in different situations. Over time, the AI learns the optimal paths and strategies to complete levels, collect the most coins, and ultimately defeat Bowser.
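To make “maximize the total reward over time” concrete, here is a minimal Python sketch that scores one imagined episode. The event names, the reward values in `EVENT_REWARDS`, and the discount factor `gamma` are illustrative assumptions, not values taken from any real Mario agent. Weighting the reward at step t by gamma to the power t (with gamma below 1) is the standard way RL makes rewards that arrive sooner count for more.

```python
# Hypothetical reward values for in-game events (illustrative only).
EVENT_REWARDS = {
    "coin": 1.0,
    "power_up": 5.0,
    "defeat_enemy": 2.0,
    "lose_life": -10.0,
    "reach_flagpole": 50.0,
}


def discounted_return(events, gamma=0.9):
    """Sum of rewards, where the reward at step t is weighted by gamma**t."""
    total = 0.0
    for t, event in enumerate(events):
        total += (gamma ** t) * EVENT_REWARDS[event]
    return total


episode = ["coin", "defeat_enemy", "coin", "reach_flagpole"]
print(discounted_return(episode, gamma=1.0))            # 54.0 (1 + 2 + 1 + 50)
print(round(discounted_return(episode, gamma=0.9), 3))  # 40.06
```

With gamma set to 1.0 every reward counts equally; lowering it makes the agent prefer reaching the flagpole sooner rather than later.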

This example illustrates how reinforcement learning allows an agent to interact with a rich environment like Super Mario, where it learns from its actions and their consequences to improve its gameplay strategy over time.
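The loop described above (act, observe a reward, adjust) can be sketched with tabular Q-learning, one classic RL algorithm, on a tiny made-up level. This is a toy under loudly stated assumptions, not how real Mario agents are built: the level layout (`START`, `FLAG`, `PIT`), the two actions, and every reward and hyperparameter value are hypothetical.

```python
import random

# A hypothetical one-dimensional "level": Mario starts at position 0,
# the flagpole is at position 5, and position 3 is a pit.
START, FLAG, PIT = 0, 5, 3
ACTIONS = {"run": 1, "jump": 2}  # how many positions each action moves Mario


def step(position, action):
    """Environment: return (next_position, reward, done)."""
    nxt = position + ACTIONS[action]
    if nxt == PIT:
        return nxt, -10.0, True   # fell into the pit
    if nxt >= FLAG:
        return nxt, 10.0, True    # reached the flagpole
    return nxt, -1.0, False       # small time penalty for every other step


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(FLAG) for a in ACTIONS}
    for _ in range(episodes):
        s, done = START, False
        while not done:
            # Epsilon-greedy: usually exploit, sometimes explore (trial and error).
            if rng.random() < epsilon:
                a = rng.choice(list(ACTIONS))
            else:
                a = max(ACTIONS, key=lambda act: q[(s, act)])
            s2, r, done = step(s, a)
            # Q-learning update toward reward + discounted best future value.
            target = r if done else r + gamma * max(q[(s2, b)] for b in ACTIONS)
            q[(s, a)] += alpha * (target - q[(s, a)])
            s = s2
    return q


def greedy_run(q):
    """Play one episode with the learned policy and return the actions taken."""
    s, done, actions = START, False, []
    while not done:
        a = max(ACTIONS, key=lambda act: q[(s, act)])
        actions.append(a)
        s, _, done = step(s, a)
    return actions


q_table = train()
print(greedy_run(q_table))
```

With these settings the learned policy should jump from 0 to 2 and from 2 to 4, clearing the pit instead of running into it. The exact Q-values depend on the seed and hyperparameters.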

How Does RLHF Work?

Let’s understand the process of how human feedback is used in reinforcement learning.

Data Collection

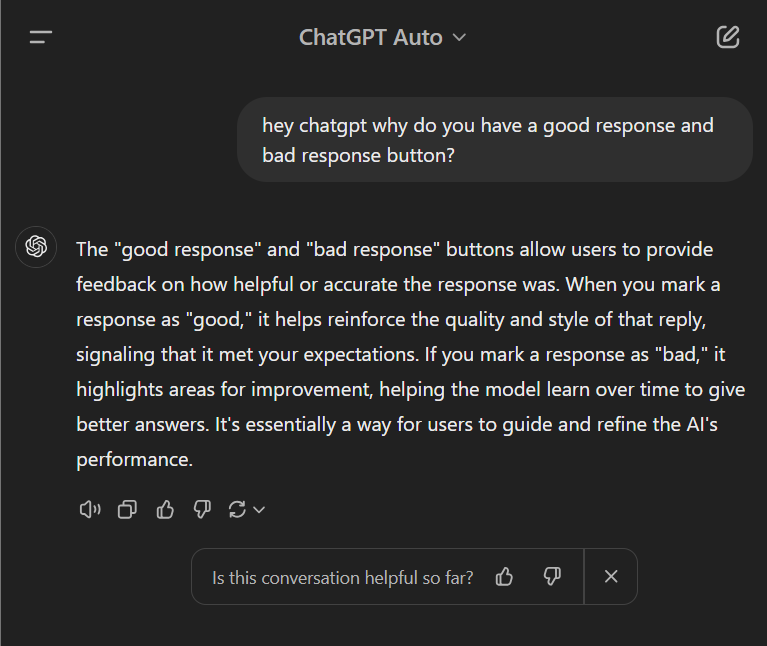

The process of Reinforcement Learning from Human Feedback (RLHF) begins with gathering human input on the AI’s outputs. This involves having human annotators rank or score responses generated by the AI based on certain criteria like fluency, coherence, or accuracy. This data forms the foundation of RLHF, allowing the AI to understand what constitutes a “good” response.
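One common way to use such rankings, described for example in OpenAI’s InstructGPT work, is to expand each ranking of K responses into K(K-1)/2 pairwise comparisons, each recording which response was preferred. The minimal sketch below assumes that approach; the `Comparison` record and the `ranking_to_pairs` helper are hypothetical names for illustration.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass
class Comparison:
    prompt: str
    chosen: str    # response the annotator ranked higher
    rejected: str  # response the annotator ranked lower


def ranking_to_pairs(prompt, ranked_responses):
    """Turn a best-to-worst ranking into every (better, worse) pair it implies."""
    # combinations() preserves input order, so the first element of each
    # pair is always the higher-ranked response.
    return [
        Comparison(prompt, better, worse)
        for better, worse in combinations(ranked_responses, 2)
    ]


pairs = ranking_to_pairs(
    "Explain photosynthesis to a child.",
    ["Answer A", "Answer B", "Answer C"],  # annotator's ranking, best first
)
for p in pairs:
    print(p.chosen, ">", p.rejected)
# Answer A > Answer B
# Answer A > Answer C
# Answer B > Answer C
```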

Reward Model Creation

One of the most crucial steps in RLHF is building the reward model. This model is trained on human feedback rankings and is used to evaluate and score future AI responses. The reward model essentially quantifies human preferences, assigning higher rewards to the kinds of responses humans rated favorably. For instance, if human feedback suggests that answers with more relevant context are preferable, the reward model learns to assign higher scores to responses that provide that context. The reward model then serves as the guiding signal for the AI’s future learning and decision-making.
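A reward model is commonly trained on pairs of responses where humans preferred one over the other, using the pairwise loss -log σ(r_chosen - r_rejected), which pushes the preferred response’s score above the rejected one’s. The sketch below assumes a deliberately tiny linear model over three hypothetical hand-made features; a real reward model is usually a large neural network that reads the text directly. The data, feature meanings, learning rate, and epoch count are all illustrative.

```python
import math

# Each response is a hypothetical feature vector:
# [provides_context, is_polite, is_off_topic].
comparisons = [
    # (features of chosen response, features of rejected response)
    ([1.0, 1.0, 0.0], [0.0, 1.0, 0.0]),
    ([1.0, 0.0, 0.0], [1.0, 0.0, 1.0]),
    ([1.0, 1.0, 0.0], [0.0, 0.0, 1.0]),
    ([0.0, 1.0, 0.0], [0.0, 1.0, 1.0]),
]


def reward(weights, features):
    """Linear reward model: a single scalar score for one response."""
    return sum(w * x for w, x in zip(weights, features))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_reward_model(data, lr=0.5, epochs=200):
    w = [0.0] * len(data[0][0])
    for _ in range(epochs):
        for chosen, rejected in data:
            # Pairwise loss: -log sigmoid(r_chosen - r_rejected).
            margin = reward(w, chosen) - reward(w, rejected)
            # d(loss)/d(margin) = -(1 - sigmoid(margin)); gradient descent step.
            scale = 1.0 - sigmoid(margin)
            for i in range(len(w)):
                w[i] += lr * scale * (chosen[i] - rejected[i])
    return w


weights = train_reward_model(comparisons)
print([round(x, 2) for x in weights])
```

After training, the weights reward the “provides context” feature and penalize the “off-topic” feature, so `reward(weights, features)` can score a new, unseen response in the same way.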

Fine-Tuning

The AI model is first pre-trained on a large dataset and then fine-tuned on high-quality examples, such as human-written demonstrations or responses that human evaluators rated highly. This fine-tuning process relies on supervised learning: the model is trained to imitate the preferred outputs. By learning to prioritize human-preferred responses, the model becomes better aligned with human expectations. This phase is critical because it adjusts the model’s behavior before reinforcement learning begins, giving the later stage a starting point that already imitates human preferences and judgments reasonably well.
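The selection-then-imitation idea in the paragraph above can be sketched as: keep the best-rated response for each prompt, then train the model to maximize the likelihood of those responses (equivalently, minimize their average negative log-likelihood). In this minimal sketch the ratings, the `min_score` cutoff, and the token probabilities passed to `sft_loss` are hypothetical; in a real pipeline those probabilities come from the language model being fine-tuned.

```python
import math

# Hypothetical rated data: (prompt, response, human score from 1 to 5).
rated = [
    ("Summarize the memo.", "The memo announces a new office.", 4),
    ("Summarize the memo.", "Memo.", 1),
    ("Translate 'merci'.", "Thank you.", 5),
    ("Translate 'merci'.", "Thanks a lot, friend!", 3),
]


def build_sft_dataset(rows, min_score=4):
    """Keep the best-scoring response per prompt, if it clears min_score."""
    best = {}
    for prompt, response, score in rows:
        if score >= min_score and (prompt not in best or score > best[prompt][1]):
            best[prompt] = (response, score)
    return [(prompt, response) for prompt, (response, _) in best.items()]


def sft_loss(token_probs):
    """Average negative log-likelihood the model assigns to the target tokens."""
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


sft_data = build_sft_dataset(rated)
print(sft_data)
# [('Summarize the memo.', 'The memo announces a new office.'), ("Translate 'merci'.", 'Thank you.')]

# A model that assigns higher probability to the target tokens gets a lower loss.
print(sft_loss([0.9, 0.8, 0.95]) < sft_loss([0.2, 0.3, 0.1]))  # True
```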

Reinforcement Learning

Once the reward model is in place, the AI uses reinforcement learning to optimize its behavior. In this phase, the model repeatedly generates outputs for prompts and receives a score for each one from the reward model. Over time, it refines its responses to maximize those scores, improving with each iteration. In practice, this optimization (often performed with an algorithm such as Proximal Policy Optimization, or PPO) usually includes a penalty that keeps the model from drifting too far from its fine-tuned starting point. Through this process of trial and error, the model learns to meet human expectations more effectively, producing outputs that are not only accurate but also align with human judgment on subjective qualities like creativity or nuance.
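The sketch below shows the core idea on a toy problem: a “policy” that chooses among three canned responses is updated to earn higher reward-model scores, minus a penalty for moving away from a frozen reference copy of the fine-tuned model (a KL-style penalty that many RLHF setups use). It uses plain REINFORCE as a simpler stand-in for PPO, the algorithm commonly used in practice. The responses, scores, `BETA`, and learning rate are hypothetical; real systems generate text token by token and apply the update to the model’s token probabilities.

```python
import math
import random

# Toy setup (all hypothetical): for one prompt, the policy picks one of
# three canned responses instead of generating text.
responses = ["curt answer", "helpful answer", "rambling answer"]
rm_scores = [0.2, 1.0, 0.4]   # scores from an already-trained reward model
ref_logits = [0.0, 0.0, 0.0]  # frozen reference (the fine-tuned model)
BETA = 0.1                    # strength of the KL-style penalty


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_policy(steps=2000, lr=0.1, seed=0):
    rng = random.Random(seed)
    logits = list(ref_logits)  # start from the fine-tuned model
    ref_probs = softmax(ref_logits)
    for _ in range(steps):
        probs = softmax(logits)
        i = rng.choices(range(len(responses)), weights=probs)[0]
        # Reward-model score minus a penalty for drifting from the reference.
        penalty = BETA * (math.log(probs[i]) - math.log(ref_probs[i]))
        r = rm_scores[i] - penalty
        # REINFORCE: the gradient of log softmax w.r.t. logits is (one_hot - probs).
        for j in range(len(logits)):
            grad_logp = (1.0 if j == i else 0.0) - probs[j]
            logits[j] += lr * r * grad_logp
    return softmax(logits)


final_probs = train_policy()
for text, p in zip(responses, final_probs):
    print(f"{text}: {p:.2f}")
```

Raising `BETA` keeps the final probabilities closer to the reference model; lowering it lets the policy concentrate more heavily on the highest-scoring response.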

RLHF blends human intuition with the power of machine learning to ensure that AI models are trained not only on data but also on human values and preferences. This iterative process allows models to continuously improve, becoming more helpful and aligned with human expectations in complex and subjective tasks.

Applications of RLHF

RLHF is primarily used in applications where human preferences are subjective or difficult to define with hard rules. Here are some of the key areas where RLHF has been impactful:

- Generative AI: In models that generate text, such as chatbots or translators, RLHF helps create responses that are not only correct but also sound natural and engaging.

For example, in ChatGPT or Google Bard, RLHF is used to refine chatbot responses. Human feedback helps adjust the tone, making the AI sound more empathetic or conversational in customer service interactions. Human evaluators can rate responses for friendliness and clarity, which trains the AI to provide more helpful and natural answers.

- AI Image Generation: RLHF is applied to text-to-image models, where human feedback is essential for judging whether the generated images match human expectations.

Text-to-image tools like DALL-E 2 and Midjourney must produce images that meet user expectations, and human feedback is one way to improve them. For example, if a user requests “a futuristic city at sunset,” the AI might generate several images, and human feedback on which ones work best can help refine the model to produce images that better capture the requested atmosphere, color schemes, and architectural elements.

- Video Game Agents: RLHF is also used to train AI agents in video games to behave in ways that match what humans want, based on human feedback about their behavior.

For example, in early RLHF research, agents learned to play Atari games from a reward model trained on human comparisons of short gameplay clips rather than from the game’s built-in score, showing that an agent can learn complex behavior from a relatively small amount of human feedback.

Limitations of RLHF

While RLHF has shown significant success in fine-tuning AI agents for complex tasks, it has several limitations that can impact its efficiency and even reliability.

- Costly Human Feedback: Gathering human preferences can be resource-intensive, both in terms of time and money. Since human feedback is essential for creating a reward model, the high costs of collecting and processing this data limit how widely RLHF can be scaled. Some research suggests that Reinforcement Learning from AI Feedback (RLAIF), which replaces human input with AI-generated feedback, could mitigate these costs, but this approach is still in its infancy.

- Subjectivity of Human Preferences: Human feedback is inherently subjective, making it difficult to establish a universal definition of what “high-quality” output should be. People can disagree not only on facts but also on what constitutes appropriate behavior for an AI. This lack of consensus creates ambiguity, leading to inconsistent training signals.

- Fallibility of Human Feedback: Human evaluators can provide flawed or malicious feedback. For example, evaluators may unintentionally introduce biases, or in some cases, adversaries might attempt to “troll” the system with bad inputs. This problem has been studied in detail, and some methods have been proposed to identify and mitigate the impact of malicious data, but the issue remains a significant challenge.

- Risk of Overfitting and Bias: RLHF systems are prone to overfitting on the biases of a specific demographic group from which feedback is gathered. If the feedback primarily comes from a narrow demographic, the model may struggle when applied to more diverse user bases or to different types of queries. As a result, RLHF can amplify inherent biases present in the training data.

- Scalability Issues: RLHF requires an efficient way to integrate human feedback at scale. Training with human input tends to be slower than purely data-driven approaches. Furthermore, integrating this feedback effectively into the model without causing significant divergence from the original model’s performance can be difficult.
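Subjectivity and unreliable feedback (the second and third points above) are often examined by measuring how much annotators agree. The minimal sketch below, on hypothetical labels, computes each annotator’s agreement with the per-item majority and flags anyone below a threshold. This is just one simple heuristic: because honest annotators can legitimately disagree on subjective items, a flag marks an annotator for review rather than proving bad-faith input. The annotator names, labels, and `threshold` are illustrative assumptions.

```python
from collections import Counter

# Hypothetical labels: for each of five comparisons, which response
# ("A" or "B") each annotator preferred.
labels = {
    "ann_1": ["A", "A", "B", "A", "B"],
    "ann_2": ["A", "A", "B", "B", "B"],
    "ann_3": ["A", "B", "B", "A", "B"],
    "ann_4": ["A", "A", "B", "A", "A"],
    "ann_5": ["B", "B", "A", "B", "A"],  # opposes the group on every item
}


def majority_labels(all_labels):
    """Most common choice per comparison across all annotators."""
    per_item = zip(*all_labels.values())  # regroup labels by comparison
    return [Counter(item).most_common(1)[0][0] for item in per_item]


def agreement(a, b):
    """Fraction of comparisons on which two label sequences match."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def flag_annotators(all_labels, threshold=0.5):
    majority = majority_labels(all_labels)
    scores = {name: agreement(seq, majority) for name, seq in all_labels.items()}
    flagged = [name for name, s in scores.items() if s < threshold]
    return scores, flagged


scores, flagged = flag_annotators(labels)
print(scores)   # {'ann_1': 1.0, 'ann_2': 0.8, 'ann_3': 0.8, 'ann_4': 0.8, 'ann_5': 0.0}
print(flagged)  # ['ann_5']
```

Even among the four consistent annotators, agreement is not perfect, which is exactly the kind of inconsistent training signal that subjective preferences produce.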

These limitations highlight that while RLHF has opened new avenues for human-guided AI development, it still faces some significant problems, especially when it comes to cost, bias, and alignment with user expectations.

Conclusion

Reinforcement Learning from Human Feedback (RLHF) presents an innovative way of aligning AI behavior with human preferences, allowing models to generate more human-like and contextually appropriate responses. Its power lies in addressing complex, subjective tasks like creativity or aesthetics, where traditional rule-based models struggle. By integrating human evaluations into reinforcement learning, RLHF enables AI systems to improve based on reward models learned from human judgments.

However, RLHF also faces challenges such as the high cost of gathering human input, the inherent subjectivity of human preferences, and the risk of overfitting and bias. Additionally, it raises questions about scalability and the risk of malicious feedback from users.

Despite these limitations, RLHF holds promise for applications in areas ranging from generative AI to video game agents, where nuanced human feedback is essential for improving AI performance.

FAQs

What is Reinforcement Learning from Human Feedback (RLHF)?

RLHF is a machine learning technique where AI models are trained using human feedback as part of the reward function. In traditional reinforcement learning, an AI learns by receiving rewards or penalties based on its actions. In RLHF, human evaluators provide feedback on the AI’s outputs, which is then used to guide and improve the model’s performance, particularly in complex or subjective tasks like natural language processing or creativity assessment.

What are the biggest challenges in implementing RLHF?

Key challenges include the high cost of gathering human feedback, subjectivity in human evaluations, the risk of bias or overfitting to specific demographics, and difficulties in scaling the process efficiently.

How is RLHF applied in real-world applications?

RLHF is used in areas like generative AI for improving chatbot responses, AI image generation to match human expectations, and AI-assisted tools such as code review assistants, where human feedback refines performance.