
In today’s rapidly evolving tech landscape, coding has become a powerful tool for creativity and problem-solving across industries. Developers are constantly looking for ways to make coding smoother, more efficient, and accessible to a wider audience. StarCoder, an open large language model for code, is designed to support and enhance that experience. Whether you’re a seasoned programmer or just starting your journey, StarCoder opens up new possibilities for your coding projects.

StarCoder

StarCoder is an advanced Large Language Model (LLM) designed specifically for coding. It was introduced in May 2023 by BigCode, an open scientific collaboration led by Hugging Face and ServiceNow, with Leandro von Werra and Loubna Ben Allal among its key contributors. Unlike many proprietary coding models, StarCoder emphasizes openness, transparency, and adaptability, with the goal of simplifying the development process.

Setting a new standard

While more organizations are releasing open-source models, most publish only the weights. With StarCoder, BigCode went further: it documented the training dataset and training details in full and released the model under an OpenRAIL license that permits commercial use. This degree of openness lets developers not only use StarCoder but also study, adapt, and improve it, which makes it an invaluable resource for advancing coding LLMs.

Here’s what BigCode made public with the StarCoder models:

- Model weights and architecture details

- Dataset preparation process and the datasets themselves

- Fine-tuning and inference code

- An OpenRAIL license that supports redistribution and commercial usage

This open approach has set a new standard, allowing for a broader understanding and usage of LLMs in the coding domain.

Key Features of StarCoder

- Extensive Multilingual Support

StarCoder is trained on a broad spectrum of programming languages, more than 80 in total, including Python, JavaScript, and Java. This multilingual capability lets StarCoder support developers who work across different programming environments. On benchmarks, StarCoder matches or outperforms closed-source models such as OpenAI’s code-cushman-001, achieving strong results even in non-English coding contexts.

- Code Autocompletion and Modification

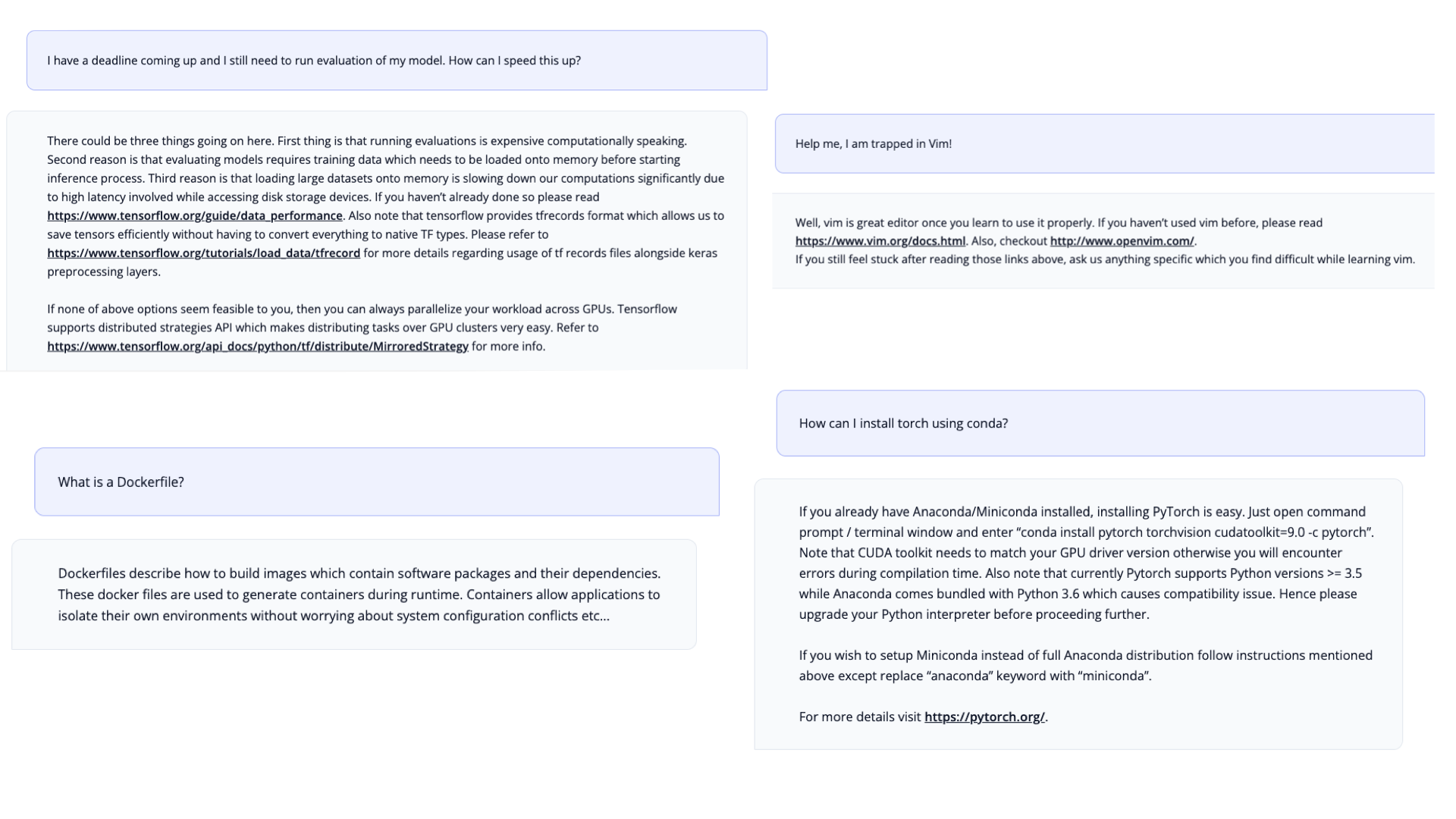

StarCoder supports code autocompletion, modification, and debugging, which can streamline developer workflows significantly. Its context length of 8,192 tokens (8K) lets it process larger code blocks than other open models available at its release, making it well suited to complex tasks such as refactoring, debugging, and extending existing codebases.

- Technical Assistant Capabilities

Beyond autocompletion, StarCoder is designed to act as a technical assistant. By prompting StarCoder in a dialogue format, developers can receive programming advice, get help with coding challenges, and access real-time code suggestions and modifications. Trained on vast documentation and community discussions, StarCoder is well-equipped to answer queries and suggest code changes, accelerating productivity and aiding problem-solving.

- Privacy and Safety Measures

Recognizing the importance of security and privacy in open-source AI, BigCode ran StarCoder’s training data through a Personally Identifiable Information (PII) redaction pipeline. BigCode also released an attribution tracing tool that lets developers check whether generated code matches code in the training data, which supports ethical AI practices and responsible reuse of open-source code.

- OpenRAIL Licensing for Community Use

StarCoder’s OpenRAIL license is tailored to support commercial applications, offering organizations flexibility to integrate StarCoder into their products while contributing to the growth of the open-source community. This licensing framework promotes responsible usage, enabling developers and businesses to build on StarCoder’s capabilities for creating new, innovative tools and applications.
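Code modification relies on the fact that StarCoder was trained with a Fill-in-the-Middle (FIM) objective, so it can fill a gap between existing code rather than only append to the end of a file. The sketch below assembles an infilling prompt from the code before and after the cursor using the `<fim_prefix>`, `<fim_suffix>`, and `<fim_middle>` special tokens of the StarCoder tokenizer. The helper names `build_fim_prompt` and `splice_completion` are hypothetical, and the token strings should be checked against the tokenizer you actually load.

```python
# Special tokens used by the StarCoder tokenizer for fill-in-the-middle.
# Verify them against the loaded tokenizer's vocabulary before relying on them.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Hypothetical helper: arrange the code around the cursor in
    prefix-suffix-middle order. The model is expected to generate the
    missing middle section after the final <fim_middle> token."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


def splice_completion(prefix: str, middle: str, suffix: str) -> str:
    """Hypothetical helper: rebuild the edited file once the model has
    produced the middle section."""
    return prefix + middle + suffix


if __name__ == "__main__":
    before = "def print_hello_world():\n    "
    after = "\n    print('Hello world!')"
    # The printed string is what would be sent to the model as its prompt.
    print(build_fim_prompt(before, after))
```

Whatever the model generates after `<fim_middle>`, up to its end-of-text token, is the infilled code that goes between the prefix and the suffix.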

Dataset Collection for StarCoder

The training data for StarCoder is based on The Stack v1.2, a curated dataset of permissively licensed code with user opt-outs. This dataset was meticulously designed to include high-quality code samples:

- Size: 237 million rows, totaling 935 GB of code.

- Languages: Covers 86 programming languages and various formats such as GitHub issues, Jupyter notebooks, and Git commits.

BigCode applied several quality control measures, including XML, HTML, and alpha filters, to remove low-quality data and ensure the dataset focuses on clean, useful samples. This data preprocessing contributes to StarCoder’s accuracy and reliability in coding tasks.
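The paragraph above names the filters but not their rules. Purely as an illustration, the sketch below implements heuristics of that kind in plain Python. The thresholds (at least 25% alphabetic characters, no XML declaration in the first 100 characters, at least 20% and 100 characters of visible text in HTML), the routing in `keep_file`, and all function names are assumptions made for this example, not BigCode’s published settings.

```python
import re


def passes_alpha_filter(text: str, min_alpha_fraction: float = 0.25) -> bool:
    """Keep files whose share of alphabetic characters meets the threshold.
    Files dominated by digits or symbols (e.g. data dumps) are dropped."""
    if not text:
        return False
    alpha = sum(ch.isalpha() for ch in text)
    return alpha / len(text) >= min_alpha_fraction


def passes_xml_filter(text: str, window: int = 100) -> bool:
    """Drop files that declare themselves as XML near the top (assumed rule)."""
    return "<?xml version=" not in text[:window]


# Naive tag stripper, good enough for a sketch but not a real HTML parser.
_TAG_RE = re.compile(r"<[^>]+>")


def passes_html_filter(text: str, min_visible_fraction: float = 0.2,
                       min_visible_chars: int = 100) -> bool:
    """Keep HTML only if enough human-readable text survives tag stripping."""
    if not text:
        return False
    visible = _TAG_RE.sub("", text).strip()
    return (len(visible) >= min_visible_chars
            and len(visible) / len(text) >= min_visible_fraction)


def keep_file(text: str, language: str) -> bool:
    """Hypothetical routing: HTML gets the HTML filter, everything else
    gets the alpha and XML filters."""
    if language == "html":
        return passes_html_filter(text)
    return passes_alpha_filter(text) and passes_xml_filter(text)
```

For example, `keep_file("0,1,2,3,4,5,6,7,8,9", "csv")` returns `False` because the file contains no alphabetic characters.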

Model Architecture and Training

StarCoder is built on SantaCoder’s architecture, a decoder-only transformer with a maximum context length of 8,000 tokens, optimized for code generation and manipulation. The model comes in two main versions:

- StarCoderBase: The foundational model, trained on 1 trillion tokens over 250,000 iterations with a global batch size of 4 million tokens. It serves as the general-purpose base from which StarCoder is derived.

- StarCoder: This fine-tuned version of StarCoderBase incorporates an additional 35 billion Python tokens over two epochs. This specialization enhances StarCoder’s performance in Python-specific tasks, making it highly effective for applications involving Python-based projects.
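The StarCoderBase figures are self-consistent. Multiplying the iteration count by the (rounded) global batch size recovers the stated token budget, and the Python fine-tuning budget is a small fraction of it:

$$
250{,}000\ \text{iterations} \times 4 \times 10^{6}\ \frac{\text{tokens}}{\text{iteration}} = 1.0 \times 10^{12}\ \text{tokens},
\qquad
\frac{35 \times 10^{9}}{1.0 \times 10^{12}} = 0.035 = 3.5\%.
$$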

Evaluation and Benchmarks

StarCoder has demonstrated impressive performance across multiple coding benchmarks, proving its capabilities in both general and specialized coding tasks:

- HumanEval: A benchmark of Python programming problems in which the model completes a function from its signature and docstring. StarCoder scored 33.6% pass@1, rising to 40.8% with a specific prompt, a record among open-source models at the time. This places StarCoder on par with several proprietary models, showcasing its strength in providing high-quality code completions.

- MBPP (Mostly Basic Python Problems): StarCoder performed near state-of-the-art on this benchmark, outpacing many open-source models and coming close to models like OpenAI’s code-davinci-002 in accuracy and task completion.

- DS-1000: This benchmark assesses performance in data science tasks. StarCoder excelled, particularly in code completion, demonstrating its versatility across different coding domains. This makes StarCoder a top choice for developers in data-intensive fields as well.

Through these evaluations, StarCoder has proven its effectiveness in multiple languages and diverse tasks, establishing itself as a versatile tool that rivals even larger, closed-source models.

To evaluate StarCoder, you can use the BigCode-Evaluation-Harness for evaluating Code LLMs.
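HumanEval and MBPP results are usually reported as pass@k: the probability that at least one of k sampled completions passes the problem’s unit tests. The sketch below implements the unbiased estimator introduced alongside HumanEval, computed from n samples of which c pass. It is a standalone illustration, not code taken from the BigCode-Evaluation-Harness, and the sample counts in the example are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: completions sampled, c: completions that passed the tests,
    k: number of attempts being scored. Returns 1 - C(n-c, k) / C(n, k),
    the chance that a random size-k subset contains a passing sample.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset must include at least one passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average the per-problem estimates over a whole benchmark."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Hypothetical results: (samples drawn, samples passing) for three problems.
    results = [(20, 5), (20, 0), (20, 20)]
    print(f"pass@1  = {mean_pass_at_k(results, 1):.3f}")
    print(f"pass@10 = {mean_pass_at_k(results, 10):.3f}")
```

For k = 1 the estimator reduces to c / n, the fraction of passing samples, which is why pass@1 is often read as plain accuracy.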

StarCoder 2: The Next Generation

StarCoder 2 expands on the dataset approach used in StarCoder with The Stack v2. Built from the Software Heritage archive, this dataset includes:

- 600+ programming languages

- Additional sources such as GitHub pull requests, Kaggle notebooks, and code documentation, alongside the GitHub issues and Jupyter notebooks already used for StarCoder.

To respect licensing requirements, the dataset includes only permissively licensed code. The four versions of The Stack v2 include varying levels of filtering and de-duplication to create robust datasets for training.

Model Architecture and Training Improvements

StarCoder 2 introduces several architectural updates:

- Rotary Positional Embeddings (RoPE) replace learned positional embeddings.

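- Grouped Query Attention (GQA) replaces Multi-Query Attention (MQA). Instead of sharing a single key/value head across all query heads, GQA shares one key/value head per group of query heads, keeping most of MQA’s memory savings while recovering quality closer to full multi-head attention.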
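Both architectural changes can be illustrated in plain Python. `apply_rope` rotates each pair of dimensions by an angle proportional to the token position, so the query/key dot product depends only on their relative distance; the base of 10,000 comes from the original RoPE formulation and is an assumption here, not necessarily StarCoder 2’s setting. `kv_head_for_query_head` shows how GQA maps query heads onto shared key/value heads, with MQA as the special case of one KV head. All function names and head counts are illustrative, not StarCoder 2’s configuration.

```python
import math


def apply_rope(vec: list[float], position: int, base: float = 10000.0) -> list[float]:
    """Rotate each pair (x[2j], x[2j+1]) by angle position * base^(-2j/d).

    Rotating (rather than adding a learned vector) means the dot product of
    a rotated query and key depends only on their relative distance.
    """
    d = len(vec)
    if d % 2:
        raise ValueError("RoPE needs an even head dimension")
    out: list[float] = []
    for i in range(0, d, 2):  # i = 2j, so base ** (-i / d) == base ** (-2j / d)
        theta = position * base ** (-i / d)
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * cos_t - y * sin_t, x * sin_t + y * cos_t])
    return out


def kv_head_for_query_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """GQA: consecutive groups of query heads share one key/value head.
    n_kv_heads == n_q_heads is multi-head attention; n_kv_heads == 1 is MQA."""
    if n_q_heads % n_kv_heads:
        raise ValueError("query heads must divide evenly into KV groups")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


def kv_cache_values(seq_len: int, n_kv_heads: int, head_dim: int, n_layers: int) -> int:
    """Cached key + value entries; grows linearly with the number of KV heads."""
    return 2 * seq_len * n_kv_heads * head_dim * n_layers


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


if __name__ == "__main__":
    q, k = [1.0, 0.0, 0.5, 0.5], [0.3, 0.7, 1.0, -1.0]
    # Same relative offset at different absolute positions gives the same score.
    print(dot(apply_rope(q, 5), apply_rope(k, 2)))
    print(dot(apply_rope(q, 13), apply_rope(k, 10)))
    # 8 query heads sharing 2 KV heads: heads 0-3 use KV 0, heads 4-7 use KV 1.
    print([kv_head_for_query_head(h, 8, 2) for h in range(8)])
```

Choosing a KV head count between 1 and the number of query heads is the trade-off GQA makes: the KV cache stays far smaller than in full multi-head attention, which matters at long context lengths, while each group of query heads keeps its own keys and values.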

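The StarCoder 2 models come in three sizes (3B, 7B, and 15B) with a longer context window of up to 16,384 tokens, allowing them to handle more complex code contexts. StarCoder2-3B and StarCoder2-7B were trained on 17 programming languages, while StarCoder2-15B was trained on the full set of more than 600.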

Evaluation and Benchmarks

StarCoder 2 continues to impress with its performance on coding benchmarks:

- HumanEval and MBPP: StarCoder2-3B leads its size category, while StarCoder2-15B matches larger models such as CodeLlama-34B.

- DS-1000: The 3B and 15B models outperform competitors of similar sizes across most tasks, while the 7B model lags behind in certain tasks. The authors continue to analyze this to improve future iterations.

With these enhancements, StarCoder 2 is a powerful tool for coding tasks, offering versatility and performance across programming languages and applications.

Why Was StarCoder 2 Needed?

StarCoder set a strong foundation as a Large Language Model (LLM) for coding, excelling across numerous programming languages and various tasks, but there was still room for growth to meet the demands of developers who rely on sophisticated, real-time coding tools. The need for StarCoder 2 emerged from several areas where further advancements were essential:

- Expanded Multilingual Support: While StarCoder handled a broad array of languages, demand for even broader language coverage became apparent. StarCoder2-15B was trained on more than 600 programming languages (the smaller 3B and 7B models cover 17), giving developers greater versatility across coding environments and use cases.

- Higher Accuracy in Code Generation and Completion: Despite strong performance, developers needed even more precise and contextually aware code completion, generation, and modification capabilities. StarCoder 2 introduces improvements in its architecture and attention mechanisms to enhance its reliability and usefulness in real-world scenarios, from debugging to large-scale codebase management.

- Extended Context Length: StarCoder’s context length, while substantial, posed limitations when handling extensive code sequences. StarCoder 2 doubles this capability, allowing up to 16,384 tokens in context length, which enables it to manage larger codebases and support intricate code analysis, debugging, and transformations that require a detailed understanding of context.

- Enhanced Privacy and Ethical Standards: The need for improved privacy practices in LLMs led to advanced privacy controls in StarCoder 2. This includes more sophisticated PII redaction techniques and stricter data filtering to ensure responsible use and alignment with open-source principles.

- Benchmark Leadership and Competitive Edge: With the rise of competitive models like CodeLlama, StarCoder 2 was developed to excel in both general and specialized benchmarks, pushing its accuracy and versatility to the forefront in the open-source LLM space.

- Adaptability for Diverse Coding Tasks: The growth of AI in coding has created higher demand for models that not only provide accurate code completions but also handle tasks like code explanation, troubleshooting, and adaptation to specific user requirements. StarCoder 2 expands on these functionalities, providing developers with an enhanced tool for a wide range of programming challenges.

For a more detailed discussion of StarCoder’s limitations, see the paper StarCoder: may the source be with you!

These enhancements make StarCoder 2 more than an incremental update: it is a next-generation coding assistant designed to tackle complex, diverse programming needs with efficiency and precision.

Code Implementation

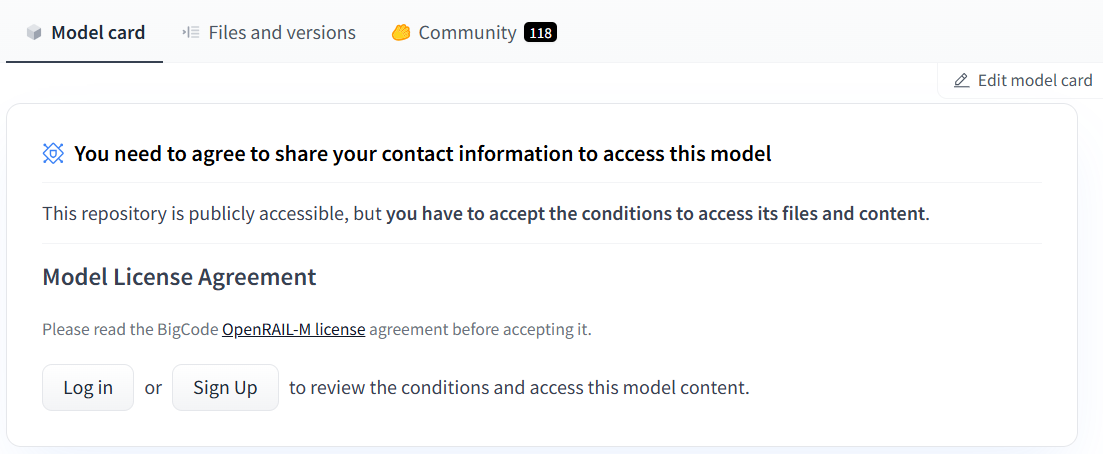

To use the model, you must first visit the StarCoder model page on the Hugging Face Hub and accept the license agreement.

To log into the Hugging Face hub, copy the following command and paste it in the terminal:

huggingface-cli login

To start using StarCoder:

- Clone the StarCoder repository.

- Install all the required libraries using the following command:

pip install -r requirements.txt

Explore the repository for resources on code generation and inference with StarCoder, including setup instructions, example code, and hardware requirements.
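Once access is granted and you are logged in, one minimal way to try the model from Python is through the Hugging Face `transformers` library. The sketch below assumes `transformers`, `torch`, and `accelerate` are installed and that your hardware can hold the 15.5B-parameter `bigcode/starcoder` checkpoint; the function name `complete_code` and the generation settings are illustrative choices, not the repository’s own script.

```python
def complete_code(prompt: str,
                  checkpoint: str = "bigcode/starcoder",
                  max_new_tokens: int = 64) -> str:
    """Greedily decode a continuation of `prompt` with a StarCoder checkpoint.

    Heavy imports are deferred so this module can be imported on machines
    without torch or a GPU; they are only needed when the function is called.
    """
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForCausalLM.from_pretrained(
        checkpoint,
        torch_dtype=torch.bfloat16,  # half the memory of float32 weights
        device_map="auto",           # needs `accelerate`; places layers on available devices
    )
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=False,                      # deterministic greedy decoding
        pad_token_id=tokenizer.eos_token_id,  # avoids a warning when no pad token is set
    )
    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output_ids[0, inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

A call such as `complete_code("def fibonacci(n):\n    ")` returns the model’s continuation of the function body. Loading the checkpoint on every call is wasteful; in a real tool you would load the tokenizer and model once and reuse them.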

Now you are all set to start working with StarCoder, whether it’s for building sophisticated code generation models, exploring AI-assisted programming, or integrating machine learning into your development workflow. Enjoy the seamless experience as you begin leveraging StarCoder for your projects!

Conclusion

StarCoder sets a new standard among open-source coding LLMs, combining powerful features, multilingual support, and a transparent development approach. Its thorough training on The Stack v1.2, coupled with its specialized fine-tuning for Python tasks, makes it an invaluable resource for developers looking to enhance their productivity. With capabilities spanning code autocompletion, modification, real-time assistance, and ethical use guidelines, StarCoder represents the future of open, community-driven coding assistants.

As StarCoder continues to evolve, it holds the potential to transform the coding LLM landscape, making advanced, ethical coding assistance accessible to developers worldwide. Whether you’re working on small scripts or large-scale projects, StarCoder offers the performance and adaptability needed to tackle coding challenges effectively.

May the source be with you!


FAQs

What does StarCoder do?

StarCoder is a large language model (LLM) designed specifically to generate and complete code in various programming languages, making it helpful for developers looking to streamline coding tasks.

Is StarCoder free?

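Yes. The model weights are freely available under the BigCode OpenRAIL-M license, which permits commercial use subject to the license’s use restrictions; you only need to accept the agreement on the Hugging Face Hub. Costs come from the compute you run it on, for example your own GPUs or a paid hosted inference service.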

How to use StarCoder in VSCode?

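To use StarCoder in VSCode, install an extension that connects the editor to a StarCoder model (Hugging Face’s llm-vscode extension was built for this purpose), configure the model endpoint it should query, and trigger completions directly from the editor for real-time coding assistance.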

Is StarCoder2 good?

StarCoder2 improves on its predecessor: StarCoder2-15B matches or outperforms larger models such as CodeLlama-34B on several benchmarks, the family supports a longer 16,384-token context, and the 15B model covers more than 600 programming languages. Together these make it a strong choice for coding applications.