Generative AI has taken significant strides in recent years, evolving from models that simply generate text based on pre-learned data to more sophisticated techniques that enhance their reliability and accuracy. One such innovation is Retrieval-Augmented Generation (RAG). In essence, RAG enriches generative AI models by enabling them to fetch and incorporate external information in real time, resulting in more informed and trustworthy responses.

In this article, we will dive deeper into the concept of RAG, how it works, and why it matters in today’s AI landscape.

Origin of RAG

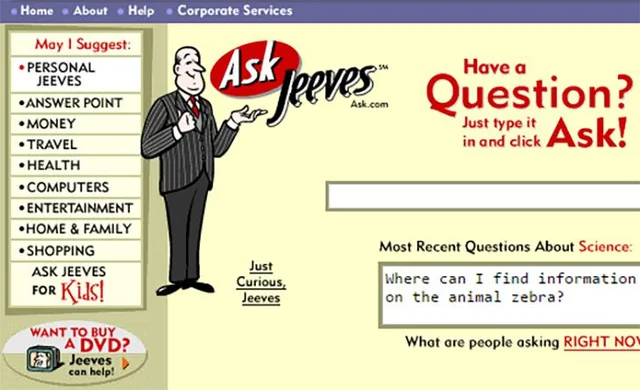

The origin of RAG can be traced back to early developments in question-answering systems, which began in the 1970s. These systems used rudimentary natural language processing (NLP) techniques to access specific databases for narrow topics. A later, widely known example is the search engine Ask Jeeves (launched in the mid-1990s and later rebranded as Ask.com), which allowed users to ask questions in plain language.

While these early systems were groundbreaking, they lacked the sophistication of modern retrieval-augmented generation. The fundamental idea of using external data to augment a system’s knowledge remains the same, but today’s RAG technology benefits from significant advancements in NLP, machine learning, and neural networks. The integration of retrieval and generation in real time, together with large vector databases, allows modern AI to provide more accurate, contextually relevant, and nuanced answers than its predecessors.

What is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation is a method that enhances the performance of large language models (LLMs) by retrieving relevant facts and data from external sources during the generation process. While traditional LLMs like GPT-3.5 or GPT-4 are highly capable of responding to general queries, they are limited by the scope of the data they were trained on, which could be outdated or incomplete. RAG overcomes this limitation by integrating real-time retrieval from knowledge bases, databases, or other external resources, thus augmenting the generative power of the AI with up-to-date, domain-specific information.

In simpler terms, think of RAG as a collaborative process between an AI model and an external knowledge source. The AI acts as a conversationalist, and whenever it needs specific or fresh data, it turns to external sources (like a library or database) to fetch information that enhances the accuracy and relevance of its responses.

How Does RAG Work?

RAG is a sophisticated technique that combines generative AI capabilities with external information sources to enhance the accuracy and reliability of the responses provided by LLMs. The RAG process unfolds in several structured steps, which can be understood more deeply as follows:

- User Query

The process begins when a user submits a question or prompt to the generative AI application. This input sets the context for every subsequent step. Unlike a standalone LLM, which relies solely on what it learned during training, RAG adds a dynamic element by letting the system look up information in external resources at query time.

- Query Transformation

Once the query is received, an embedding model transforms it into a numerical format, often referred to as an embedding or vector. This transformation lets the query be compared mathematically with other text. The embedding serves as a compact representation of the query, capturing its semantic meaning and context in a form that can be efficiently compared against a large collection of external data.

- Information Retrieval

With the user query now in a machine-readable format, RAG runs a relevance search against an indexed external knowledge base. This knowledge base can draw on a variety of sources, such as web pages, APIs, or document repositories. Retrieval often relies on semantic search, which compares the query embedding with document embeddings stored in a vector database. Many systems also rewrite the query and re-rank the results, so that the retrieved passages are not only related to the topic but actually answer the user’s question.
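The query transformation and retrieval steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `embed` function here is a toy bag-of-words stand-in for a learned embedding model, and the names `embed`, `cosine_similarity`, and `retrieve` as well as the sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (assumption): a sparse word-count vector.

    A real RAG system would call a learned embedding model here.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors; 0.0 if either is empty."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[tuple[float, str]]:
    """Score every document against the query and return the top_k matches."""
    query_vec = embed(query)
    scored = [(cosine_similarity(query_vec, embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Hypothetical knowledge base for illustration only.
documents = [
    "RAG retrieves external documents before generating an answer.",
    "Vector databases store embeddings for similarity search.",
    "Ask Jeeves let users ask questions in plain language.",
]

results = retrieve("How does RAG use external documents?", documents, top_k=1)
for score, doc in results:
    print(f"{score:.2f}  {doc}")
```

A vector database performs the same kind of comparison, but over precomputed document embeddings and with an index that avoids scoring every document on every query.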

Once the search is complete, the relevant passages are extracted and, where needed, pre-processed. In keyword-based pipelines this can include tokenization, stemming, and the removal of stop words; it often also means trimming the text to a size the LLM can accept. These steps prepare the data for integration into the LLM prompt.

- Augmenting the LLM Prompt

After retrieving the relevant information, the next step is to augment the initial user input with the newly acquired data. This is done through prompt engineering techniques that control how the user query and the retrieved passages are combined into a single input. By placing this additional context in the LLM’s input, the model has the relevant facts in front of it, which allows it to generate more accurate, informative, and contextually relevant responses.

- Response Generation

Armed with both the retrieved information and its internal knowledge, the LLM generates a final answer to the user’s query. The response can include citations or references to the external sources that contributed to it, which makes the output more credible and trustworthy. Because the answer is grounded in retrieved text that users can verify, RAG helps mitigate AI hallucinations, that is, instances where the model produces incorrect or fabricated responses.

- Updating External Data

A common challenge in utilizing external data is ensuring its freshness and relevance. To maintain up-to-date information, organizations can implement automated processes that asynchronously update the documents and their corresponding embeddings. This real-time or periodic batch processing ensures that the generative AI model can draw from current and accurate data, enhancing the overall effectiveness of the RAG system.
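One way to keep embeddings in sync with changing documents is to store a content hash next to each embedding and re-embed only what has changed. The sketch below assumes an in-memory dictionary as the index and uses a placeholder `fake_embed` function in place of a real embedding model; `refresh_index`, `content_hash`, and the sample documents are hypothetical names chosen for illustration.

```python
import hashlib


def content_hash(text: str) -> str:
    """Stable fingerprint of a document's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def fake_embed(text: str) -> list[float]:
    """Placeholder (assumption) for a call to a real embedding model."""
    return [float(len(text)), float(len(text.split()))]


def refresh_index(index: dict, documents: dict) -> list:
    """Re-embed new or changed documents and drop deleted ones.

    Returns the ids that were (re)embedded in this run.
    """
    changed = []
    for doc_id, text in documents.items():
        digest = content_hash(text)
        entry = index.get(doc_id)
        if entry is None or entry["hash"] != digest:
            index[doc_id] = {"hash": digest, "embedding": fake_embed(text)}
            changed.append(doc_id)
    # Remove entries whose source document no longer exists.
    for doc_id in list(index):
        if doc_id not in documents:
            del index[doc_id]
    return changed


index = {}
docs = {"policy": "Refunds within 30 days.", "faq": "Support is open 9 to 5."}
first_run = refresh_index(index, docs)   # both documents are new

docs["policy"] = "Refunds within 60 days."  # edited document
del docs["faq"]                             # deleted document
second_run = refresh_index(index, docs)  # only the edited document is re-embedded
```

Running a function like this on a schedule, or in response to document-change events, corresponds to the periodic batch and near-real-time update modes mentioned above.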

Through these structured steps, retrieval-augmented generation transforms how generative AI models interact with information. By integrating real-time data retrieval with internal knowledge, RAG not only improves the precision of the responses generated but also builds user trust through transparency and verifiability. This innovative approach holds the potential to revolutionize applications across various domains, enabling more intelligent and responsive AI systems.
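The prompt augmentation step, including source attribution, can be illustrated with a small template function. This is only a sketch: the instruction wording, the `build_augmented_prompt` name, and the sample file names are assumptions, and the call to an actual LLM is deliberately left out.

```python
def build_augmented_prompt(question: str, passages: list[dict]) -> str:
    """Combine the user question with numbered, attributed passages."""
    context_lines = [
        f"[{i}] ({p['source']}) {p['text']}"
        for i, p in enumerate(passages, start=1)
    ]
    context = "\n".join(context_lines)
    return (
        "Answer the question using only the numbered sources below. "
        "Cite sources as [n]. If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical retrieved passages for illustration only.
passages = [
    {"source": "returns-policy.md", "text": "Items can be returned within 30 days."},
    {"source": "shipping-faq.md", "text": "Standard shipping takes 3 to 5 business days."},
]

prompt = build_augmented_prompt("How long do I have to return an item?", passages)
print(prompt)
```

Numbering the passages gives the model a simple way to cite where each claim came from, which is what lets users verify the final answer.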

Benefits of RAG

RAG technology offers several key advantages that enhance the performance and reliability of generative AI systems:

Cost-Effective Implementation: RAG reduces the need for expensive retraining of large language models (LLMs) by enabling them to access external data sources in real time. This makes it more affordable and accessible for organizations to integrate domain-specific information into their AI applications.

Up-to-Date Information: Unlike standalone LLMs, whose knowledge is fixed at training time, RAG lets models retrieve the latest information available in their connected sources, whether it’s news, research, or social media updates, enhancing the relevance of responses.

Enhanced User Trust: By providing source attribution and citations, RAG increases the transparency of AI-generated content. Users can verify information, boosting trust and confidence in the system’s responses.

Greater Developer Control: Developers can fine-tune RAG by controlling the sources of information, ensuring more accurate, tailored responses while easily addressing issues such as outdated or sensitive data retrieval.
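As a rough illustration of this kind of control, retrieved passages can be filtered by source and freshness before they reach the model. The sketch below assumes each passage carries `source` and `updated` metadata; the field names, the `filter_passages` function, and the sample data are hypothetical.

```python
from datetime import date


def filter_passages(
    passages: list[dict], allowed_sources: set[str], not_before: date
) -> list[dict]:
    """Keep passages from approved sources updated on or after not_before."""
    return [
        p
        for p in passages
        if p["source"] in allowed_sources and p["updated"] >= not_before
    ]


# Hypothetical retrieval results for illustration only.
candidates = [
    {"source": "hr-handbook", "updated": date(2024, 3, 1),
     "text": "Employees accrue 20 vacation days per year."},
    {"source": "public-forum", "updated": date(2024, 4, 12),
     "text": "I heard it is 30 days."},
    {"source": "hr-handbook", "updated": date(2019, 1, 15),
     "text": "Employees accrue 15 vacation days per year."},
]

approved = filter_passages(
    candidates, allowed_sources={"hr-handbook"}, not_before=date(2023, 1, 1)
)
```

Here the unvetted forum post and the outdated handbook entry are both dropped, so only the current, approved passage would be passed to the LLM.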

RAG empowers AI to deliver more reliable, flexible, and accurate responses.

Applications of RAG

RAG has vast potential across a wide range of industries, transforming how organizations enhance their AI-powered tools. Below are some of the key areas where RAG is already making a significant impact:

- Healthcare: Medical professionals can leverage RAG to access up-to-date research studies, patient histories, and clinical guidelines. By integrating patient data with external medical databases, doctors and nurses can make more informed and timely decisions, improving patient care and outcomes.

- Finance: RAG-equipped AI tools allow financial analysts to pull real-time market data, industry reports, and breaking news, enabling them to make well-informed predictions and investment strategies. The ability to blend internal financial models with external data sources gives analysts a competitive edge in responding to market fluctuations.

- Customer Support: Businesses are turning internal documentation, policy manuals, and support logs into knowledge bases that RAG models can access to provide more personalized and accurate customer support. This approach results in faster resolutions, improved efficiency, and increased customer satisfaction. RAG-powered virtual assistants can also streamline employee training by providing immediate access to relevant company policies and procedures.

- Content Creation: RAG helps journalists, writers, and researchers gather real-time facts, statistics, and data from a variety of sources, enhancing the accuracy and depth of reports or articles. By combining generative capabilities with up-to-date, retrieved information, RAG assists in creating well-researched blog posts, articles, and even product catalogs.

- Market Research: RAG models can gather insights from the vast array of data available online, including news, industry reports, and social media posts. This capability enables businesses to stay on top of market trends, understand competitor behavior, and make data-driven strategic decisions with confidence.

- Sales Support: By acting as virtual sales assistants, RAG models can pull product information, specifications, pricing, and customer reviews, helping sales teams address customer inquiries more effectively. The AI can also offer personalized product recommendations, guide customers through the purchase process, and improve the overall shopping experience.

- Employee Experience: RAG models can centralize company knowledge, giving employees instant access to information about internal processes, benefits, and organizational culture. By integrating with internal databases and documents, RAG streamlines workflows and enhances employee productivity by answering questions with precise and up-to-date information.

In each of these applications, RAG bridges the gap between generative AI models and real-time, external knowledge, significantly improving the accuracy and relevance of AI-generated outputs.

RAGs vs. LLMs

RAG represents a significant evolution beyond standard LLMs. While LLMs are pre-trained on vast datasets and generate responses solely based on their training data, RAG models integrate an additional step—retrieval of external information.

| Aspect | LLMs | RAGs |
|---|---|---|
| Access to Knowledge | Relies entirely on pre-trained data, which is static. | Retrieves real-time external data, ensuring up-to-date responses. |
| Accuracy | May provide outdated or inaccurate responses for recent or specific queries. | Fetches relevant external data, improving accuracy and precision. |
| Transparency | No citations or references, making it hard to verify facts. | Provides source attribution, increasing transparency and trust. |
| Training Efficiency | Requires extensive retraining and computational resources for updates. | Leverages external databases, reducing the need for retraining. |
| Response Generation | Based solely on the model’s internal knowledge. | Combines internal knowledge with retrieved external information for richer output. |

By incorporating retrieval mechanisms, RAG models complement LLMs’ generative capabilities with real-time knowledge retrieval, producing more accurate, context-aware, and reliable responses.

Conclusion

RAG represents a major leap forward in the evolution of generative AI. By combining the creative strengths of large language models with real-time data retrieval, RAG ensures more accurate, reliable, and context-aware responses. This hybrid approach addresses key limitations of traditional models, like outdated information and hallucinations, making it a vital tool across industries such as healthcare, finance, and customer support.

As AI applications demand greater transparency and trust, RAG’s ability to cite sources and provide up-to-date information makes it a valuable asset. It not only improves factual accuracy but also opens the door to more meaningful, data-driven interactions. In a world where information is constantly evolving, RAG empowers AI systems to stay current and deliver more relevant insights, marking an exciting step toward smarter, more dependable AI.

FAQs

What is the RAG concept in AI?

RAG stands for Retrieval-Augmented Generation, an AI technique that enhances language models by combining retrieval-based systems with generative models. It uses a retrieval mechanism to fetch relevant external data, and a generative model to synthesize information based on the retrieved data.

What is RAG used for?

RAG is used to improve the quality of responses in AI applications by integrating external knowledge sources, such as databases or documents. This approach is commonly applied in tasks like question answering, chatbots, and content generation where up-to-date or domain-specific information is essential.

How does RAG work?

RAG works by retrieving relevant documents or information from an external source using a search engine or database and then feeding this information into a generative model (such as a language model). The model uses the retrieved data to generate accurate and context-aware responses.

What is the difference between RAG and LLM?

Both RAG systems and standalone large language models (LLMs) generate text with a language model. The key difference is that RAG adds a retrieval mechanism to fetch external data, whereas a standalone LLM generates responses based solely on its internal training data. RAG improves performance by integrating real-time or domain-specific information that the LLM might not otherwise have access to.

Is it easy to implement RAG?

One of the most appealing aspects of RAG is how simple it can be to implement. Developers can incorporate retrieval-augmented generation into their AI applications with as few as five lines of code, depending on the platform they’re using. This makes RAG a relatively low-cost and high-efficiency solution compared to other methods, such as retraining models with additional datasets.

Moreover, NVIDIA and other tech giants, such as AWS, Google, and Microsoft, are providing workflows and tools to help developers build RAG-powered applications easily. For example, NVIDIA offers an AI workflow that includes NeMo Retriever, a set of microservices designed to simplify the retrieval process. This toolkit makes RAG implementation accessible even to developers with limited AI expertise.