
The world of software development is evolving rapidly, and AI is at the forefront of this transformation. Developers today are leveraging advanced tools to automate tedious tasks, streamline workflows, and create innovative solutions. Among these tools, code-specific large language models (LLMs) such as Qwen2.5-Coder are proving to be game-changers. They are not only simplifying coding but also opening up new possibilities for automation and innovation.

In this article, we explore how you can harness the power of AI to enhance productivity, reduce errors, and create impactful tools tailored to your needs. Whether you’re an experienced developer or just getting started, this guide will inspire you to reimagine how you build and optimize your projects using AI-driven solutions.

What is the Qwen2.5-Coder?

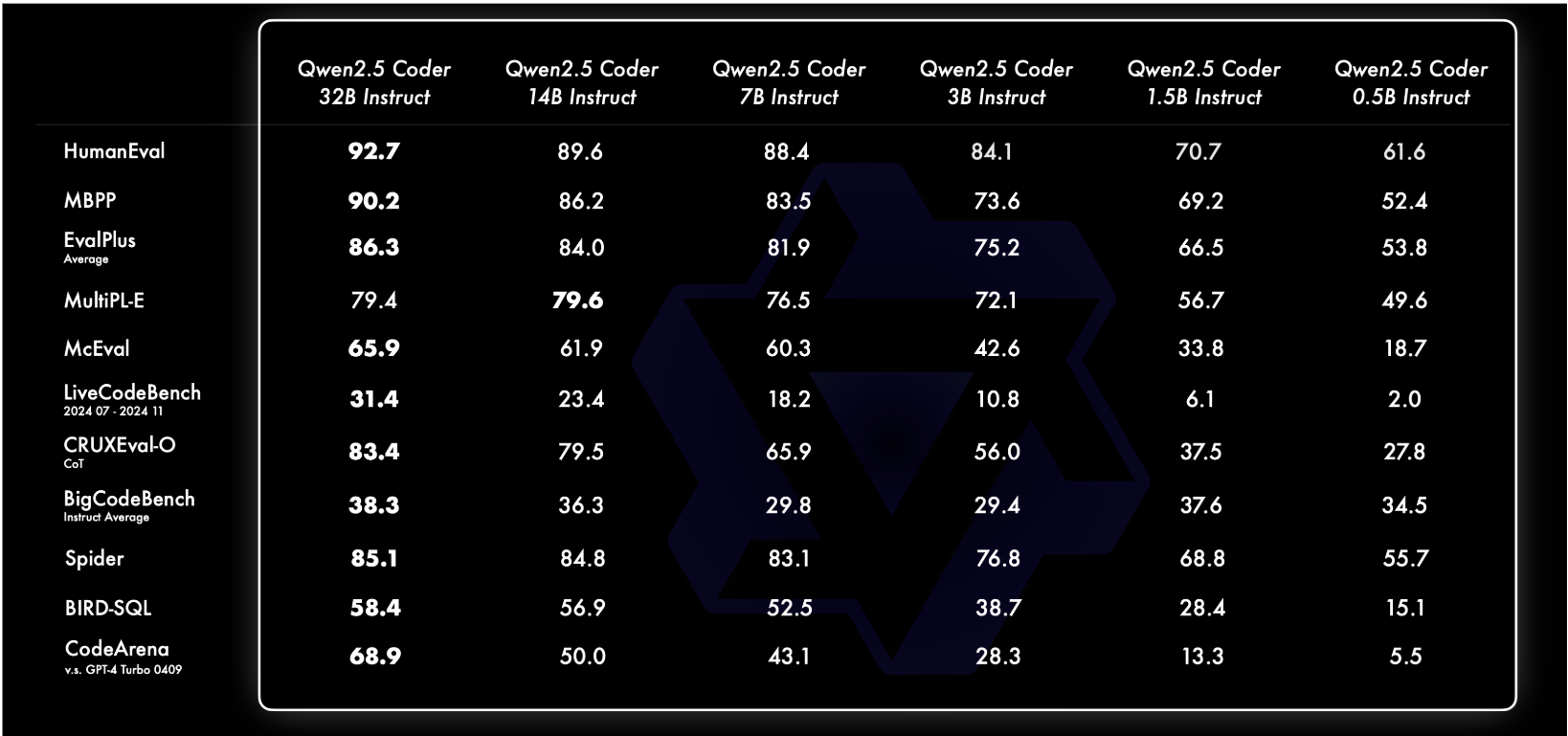

Qwen2.5-Coder is a specialized large language model (LLM) developed by Alibaba Cloud’s Qwen team, tailored specifically for coding tasks. It excels in code generation, reasoning, and fixing across more than 40 programming languages, making it a versatile tool for developers. The model is available in various sizes, including 0.5B, 1.5B, 3B, 7B, 14B, and 32B parameters, to accommodate different computational resources and requirements.

The 32B variant, in particular, has demonstrated performance comparable to proprietary models like GPT-4o, achieving high scores on benchmarks such as McEval and MdEval. This makes Qwen2.5-Coder a powerful open-source alternative for developers seeking advanced code-related capabilities.

Key Features of Qwen2.5-Coder

Qwen2.5-Coder offers capabilities aimed at improving the coding experience for developers across many domains.

Below is an overview of its standout features:

1. Code Generation

Qwen2.5-Coder excels at automatically generating code snippets based on natural language prompts. This streamlines the development process, saving time and enabling rapid prototyping for applications ranging from web development to advanced machine learning pipelines.

2. Code Completion

The model enhances productivity by completing partial code and suggesting solutions that reduce errors and improve coding efficiency. Developers can quickly fill in missing sections of their code without breaking their workflow.

3. Code Review Assistance

With its review capabilities, Qwen2.5-Coder flags syntax errors, checks code against coding standards, and suggests optimizations. This helps keep code clean, maintainable, and performant.

4. Advanced Debugging

Qwen2.5-Coder’s debugging functionality helps identify and resolve complex coding issues. By leveraging its reasoning capabilities, developers can pinpoint errors and apply fixes more quickly, which can significantly reduce debugging time.

5. Support for Multiple Programming Languages

This model is proficient across 40+ programming languages, including Haskell, Racket, Python, JavaScript, and more. Its multi-language capabilities ensure it meets the needs of developers working in diverse coding environments.

6. Multi-Language Code Repair

The model stands out for its code repair skills across multiple languages. It makes code written in unfamiliar languages easier to understand and modify, reducing the learning curve for developers worldwide.

7. Long-Context Support

The 7B, 14B, and 32B models can handle up to 128K tokens (the smaller models support 32K), so Qwen2.5-Coder can work with extensive codebases and documents. This long-context support is valuable for analyzing large projects, documentation, or code-heavy repositories.

8. Diverse Model Sizes

Qwen2.5-Coder offers six distinct model sizes: 0.5B, 1.5B, 3B, 7B, 14B, and 32B parameters. These options cater to various computational resources and requirements, making the model adaptable for individual developers, small teams, and large organizations alike.

9. State-of-the-Art Performance

Qwen2.5-Coder-32B-Instruct has achieved state-of-the-art results among open-source models on popular benchmarks, including EvalPlus, LiveCodeBench, and BigCodeBench. The Qwen team reports that its coding capabilities match those of GPT-4o, making it one of the most powerful open-source coding models available.

10. Human Preference Alignment

The Qwen team evaluates the model’s alignment with human preferences on Code Arena, an internal benchmark. The aim is for Qwen2.5-Coder to generate code that is not only functional but also consistent with best practices and developer expectations.

11. Practical Use Cases

Designed for real-world applications, the Qwen2.5-Coder is suitable for tasks such as code generation, repair, reasoning, and development assistance. It is a versatile tool that supports a wide range of development scenarios, from quick prototypes to large-scale enterprise projects.

These features collectively position Qwen2.5-Coder as a top-tier tool for developers, enabling innovation, efficiency, and reliability in modern software development. Whether you’re debugging, generating, or reviewing code, Qwen2.5-Coder ensures an optimized and seamless coding experience.
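Code completion, as described in the feature list, also covers filling a gap in the middle of a file rather than only extending it. The Qwen2.5-Coder repository documents a fill-in-the-middle (FIM) prompt built from the special tokens <|fim_prefix|>, <|fim_suffix|> and <|fim_middle|>. The sketch below only assembles such a prompt string, assuming that token format; check it against the tokenizer of the checkpoint you use. The helper name build_fim_prompt is hypothetical.

```python
# Hypothetical helper: assembles a fill-in-the-middle (FIM) prompt for a
# Qwen2.5-Coder base model. Token strings follow the format shown in the
# Qwen2.5-Coder repository (assumption: verify against your tokenizer).
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Return a prompt asking the model to write the code between prefix and suffix."""
    # The model is expected to continue after FIM_MIDDLE with the missing code.
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


# Code before and after the gap we want the model to fill.
prefix = "def mean(values):\n    total = "
suffix = "\n    return total / len(values)\n"
print(build_fim_prompt(prefix, suffix))
```

The resulting string is tokenized and passed to model.generate in the same way as an ordinary prompt; the newly generated tokens are the candidate for the missing middle section.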

| Models | Params | Layers | Context Length | Token Limits | Ideal Use Cases |
|---|---|---|---|---|---|
| Qwen2.5-Coder-0.5B | 0.49B | 24 | 32K | ~65K Tokens | Lightweight tasks, quick prototype testing. |
| Qwen2.5-Coder-1.5B | 1.54B | 28 | 32K | ~100K Tokens | Small-scale applications, code autocompletion. |
| Qwen2.5-Coder-3B | 3.09B | 36 | 32K | ~150K Tokens | Medium-complexity tasks, lightweight inference. |
| Qwen2.5-Coder-7B | 7.61B | 28 | 128K | ~250K Tokens | Complex code generation, multi-language support. |
| Qwen2.5-Coder-14B | 14.7B | 48 | 128K | ~500K Tokens | Advanced applications, large-scale model tuning. |
| Qwen2.5-Coder-32B | 32.5B | 64 | 128K | ~1M Tokens | Enterprise-level applications, long-context tasks. |

To help you choose the right model for your needs, the table above compares the Qwen2.5-Coder series. Each model strikes a different balance between parameter count, context length, and token limits on one side and hardware requirements on the other.
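To make that trade-off concrete, the sketch below estimates weight memory as parameters × bytes per parameter (2 bytes in bf16, so the 7B model needs about 7.61 × 2 ≈ 15.2 GB for its weights alone) and picks the largest model whose context window fits the prompt plus the generation budget. It assumes "32K" and "128K" mean 32,768 and 131,072 tokens, and it ignores the KV cache and activations, which grow with context length, so treat the memory figures as a lower bound. MODELS, BYTES_PER_PARAM, weight_memory_gb and pick_model are hypothetical names.

```python
# Parameter counts (billions) from the comparison table; context lengths
# assume 32K = 32,768 and 128K = 131,072 tokens. Ordered smallest to largest.
MODELS = {
    "Qwen2.5-Coder-0.5B": (0.49, 32_768),
    "Qwen2.5-Coder-1.5B": (1.54, 32_768),
    "Qwen2.5-Coder-3B": (3.09, 32_768),
    "Qwen2.5-Coder-7B": (7.61, 131_072),
    "Qwen2.5-Coder-14B": (14.7, 131_072),
    "Qwen2.5-Coder-32B": (32.5, 131_072),
}

# Bytes needed to store one parameter in each precision.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(params_billion: float, dtype: str = "bf16") -> float:
    """Memory for the weights only, in decimal GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[dtype]


def pick_model(prompt_tokens: int, max_new_tokens: int, memory_gb: float,
               dtype: str = "bf16"):
    """Largest model whose context fits prompt + output and whose weights fit memory_gb."""
    needed = prompt_tokens + max_new_tokens
    candidates = [
        name
        for name, (params, context) in MODELS.items()
        if needed <= context and weight_memory_gb(params, dtype) <= memory_gb
    ]
    # MODELS is ordered by size, so the last candidate is the largest that fits.
    return candidates[-1] if candidates else None


for name, (params, context) in MODELS.items():
    print(f"{name}: ~{weight_memory_gb(params):.1f} GB in bf16, context {context:,} tokens")

print(pick_model(prompt_tokens=1_000, max_new_tokens=100, memory_gb=24))
```

With 24 GB available and a short prompt, the sketch selects the 7B model, since the 14B weights alone need about 29.4 GB in bf16.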

Code Implementation

In this section, we’ll explore how to implement code completion using the Qwen2.5-Coder model. We will discuss setting up the environment, loading the model, and executing a code completion task.

If you need more information or context at any step, please refer to Qwen’s official documentation.

Environment Setup

Ensure you have installed Python 3.9 or higher. You’ll also need the transformers library version 4.37.0 or above, as it includes the necessary integrations for Qwen2.5 models.
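Before downloading several gigabytes of weights, it can help to confirm these two requirements. The script below is a minimal sketch that uses only the standard library; parse_release and check_environment are hypothetical helpers, and the version parsing deliberately ignores pre-release suffixes such as rc1 or dev0, so it is not a full PEP 440 parser.

```python
from __future__ import annotations

import sys
from importlib.metadata import PackageNotFoundError, version

MIN_PYTHON = (3, 9)
MIN_TRANSFORMERS = (4, 37, 0)


def parse_release(text: str) -> tuple[int, ...]:
    """Turn '4.39.1' into (4, 39, 1); pre-release suffixes like 'rc1' are dropped."""
    parts = []
    for piece in text.split(".")[:3]:
        # Keep only the leading digits of each dotted component.
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def check_environment() -> list[str]:
    """Return a list of problems; an empty list means both requirements are met."""
    problems = []
    if sys.version_info[:2] < MIN_PYTHON:
        problems.append(f"Python {sys.version.split()[0]} is older than 3.9")
    try:
        installed = version("transformers")
    except PackageNotFoundError:
        problems.append("transformers is not installed")
    else:
        if parse_release(installed) < MIN_TRANSFORMERS:
            problems.append(f"transformers {installed} is older than 4.37.0")
    return problems


issues = check_environment()
print("Environment OK" if not issues else "\n".join(issues))
```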

In your working directory, create a requirements.txt file with the following contents:

```
torch
transformers>=4.37.0
accelerate
safetensors
vllm
```

Then install the required packages:

```bash
pip install -r requirements.txt
```

Loading the Model and Tokenizer

We’ll use the AutoModelForCausalLM and AutoTokenizer classes from the transformers library to load the Qwen2.5-Coder model. For this example, we’ll utilize the 7B parameter model, which offers a balance between performance and resource requirements.

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/Qwen2.5-Coder-7B"

# Load the tokenizer
tokenizer = AutoTokenizer.from_pretrained(model_name)

# Load the model with device mapping for efficient computation
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto",
).eval()
```

Preparing the Input Prompt

Let’s consider a scenario where we want the model to generate a Python function that calculates the factorial of a number. We’ll provide a prompt to guide the model:

```python
prompt = "# Function to calculate factorial of a number\n"
```

Tokenizing the Input

We need to convert the input prompt into tokens that the model can process.

```python
# Tokenize the input prompt
input_tokens = tokenizer(prompt, return_tensors="pt").to(model.device)
```

Generating the Code Completion

With the input tokens prepared, we can now generate the code completion. We’ll set a limit on the maximum number of new tokens to generate to control the length of the output.

```python
# Generate code completion; **input_tokens passes both input_ids and attention_mask
output_tokens = model.generate(
    **input_tokens,
    max_new_tokens=100,  # Adjust as needed
    do_sample=False,  # Greedy decoding for deterministic output
)
```

Decoding and Displaying the Output

Finally, we’ll decode the generated tokens back into human-readable text and display the complete function.

```python
# Decode the generated tokens to text
generated_code = tokenizer.decode(output_tokens[0], skip_special_tokens=True)

# Print the complete function
print(generated_code)
```

Complete Code

Combining all the steps, here’s the complete code:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# Specify the model name
model_name = "Qwen/Qwen2.5-Coder-7B"

# Load the tokenizer and model
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto",
).eval()

# Define the input prompt
prompt = "# Function to calculate factorial of a number\n"

# Tokenize the input prompt
input_tokens = tokenizer(prompt, return_tensors="pt").to(model.device)

# Generate code completion (**input_tokens passes input_ids and attention_mask)
output_tokens = model.generate(
    **input_tokens,
    max_new_tokens=100,
    do_sample=False,
)

# Decode the generated tokens to text
generated_code = tokenizer.decode(output_tokens[0], skip_special_tokens=True)

# Print the complete function
print(generated_code)
```

Expected Output

When you run the above code, you can expect an output similar to:

```python
# Function to calculate factorial of a number
def factorial(n):
    if n == 0:
        return 1
    else:
        return n * factorial(n - 1)
```

This demonstrates how Qwen2.5-Coder can generate functional code snippets from simple prompts, streamlining the development process.
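Generated code should be checked before it is trusted. One lightweight approach, sketched below, is to load the snippet into a fresh namespace and compare it against known answers, here Python's math.factorial. The snippet is the expected output shown above, pasted in by hand; check_factorial is a hypothetical helper. Because exec runs arbitrary code, only use this on output you have read, or inside a sandbox.

```python
import math

# The expected model output from above, pasted in as a string.
generated_code = '''
# Function to calculate factorial of a number
def factorial(n):
    if n == 0:
        return 1
    else:
        return n * factorial(n - 1)
'''


def check_factorial(source: str, cases=range(10)) -> bool:
    """Run the generated source in a fresh namespace and compare with math.factorial."""
    namespace: dict = {}
    # Caution: exec runs arbitrary code; only use it on code you have reviewed.
    exec(source, namespace)
    candidate = namespace.get("factorial")
    if not callable(candidate):
        return False
    return all(candidate(n) == math.factorial(n) for n in cases)


print(check_factorial(generated_code))  # True
```

The test cases stop at non-negative inputs on purpose: the generated function has no guard for negative numbers, so factorial(-1) recurses until Python raises RecursionError. Gaps like this are exactly what a few extra test cases tend to expose.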

Note: Make sure your system has enough memory for the model you choose. Even the 7B model used here needs roughly 15 GB for its weights in bf16 (7.61B parameters × 2 bytes), and the larger variants need proportionally more. Adjust the max_new_tokens parameter as needed to control the length of the generated code.

For more detailed information and advanced usage, refer to the Qwen2.5-Coder GitHub repository.
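The walkthrough uses the base model with a code comment as the prompt. For plain-English instructions, the Instruct checkpoint (Qwen/Qwen2.5-Coder-7B-Instruct on Hugging Face) is normally prompted through the tokenizer's chat template. The sketch below assumes that checkpoint and a generic system message of our own choosing; build_messages and generate_from_instruction are hypothetical helpers, and calling the second one downloads the model.

```python
# Sketch: prompting the Instruct variant through the tokenizer's chat template.
# Assumptions: the checkpoint name and the system message are our own choices.
DEFAULT_SYSTEM = "You are a helpful coding assistant."


def build_messages(instruction: str, system: str = DEFAULT_SYSTEM) -> list[dict[str, str]]:
    """Chat-format messages: a system message followed by the user's request."""
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": instruction},
    ]


def generate_from_instruction(
    instruction: str,
    model_name: str = "Qwen/Qwen2.5-Coder-7B-Instruct",
    max_new_tokens: int = 512,
) -> str:
    # Imported here so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(
        model_name, torch_dtype="auto", device_map="auto"
    ).eval()

    # Render the messages into the model's prompt format, ending with an open assistant turn.
    text = tokenizer.apply_chat_template(
        build_messages(instruction), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)

    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example (downloads the 7B Instruct weights on first use):
# print(generate_from_instruction("Write a Python function that returns the n-th Fibonacci number."))
```

Slicing off the prompt tokens before decoding means the returned string contains only the model's answer, unlike the base-model example, which prints the prompt followed by the completion.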
