Think of AI infrastructure as the foundation required to build and manage AI applications. It is an integrated hardware and software environment with the resources to support AI models from creation to deployment and to meet the computational demands of running AI/ML algorithms.

In this article, we will learn what AI infrastructure is, look at its key components, and discuss how it differs from IT infrastructure. We will also talk about the factors that contribute to a high-quality AI infrastructure and the challenges that come with it.

What is an AI Infrastructure?

Businesses and enterprises are quickly moving towards AI solutions for tasks like data analytics, predictive modeling, and automation. Supporting these systems requires a complete environment, including both hardware and software components, built for artificial intelligence (AI) and machine learning (ML) workloads. That environment is what we call AI infrastructure.

AI infrastructures are built to handle the high computational and data processing demands of AI algorithms. To do this, they combine hardware capable of handling huge datasets, parallel processing, and scalable storage with software tools such as machine learning frameworks and data processing libraries.

The main purpose of an AI infrastructure is to give AI developers a platform for processing and analyzing enormous volumes of data efficiently, which in turn enables faster and more accurate decision-making.

Key Components of AI Infrastructure

While an AI infrastructure is complex and has many components, the following are the key ones that allow it to serve its purpose:

Compute Resources

AI relies on specialized hardware to handle the massive computational demands of machine learning and deep learning models.

- GPUs: Designed for parallel processing, GPUs are essential for tasks like image recognition, natural language processing (NLP), and training deep neural networks. They can perform thousands of calculations simultaneously, significantly speeding up model training.

- TPUs: Developed by Google, TPUs are custom-built for tensor operations, making them highly efficient for running large-scale ML models, particularly in cloud environments.

- CPUs: While not as fast as GPUs or TPUs for parallel tasks, CPUs are still used for general-purpose computing and smaller-scale ML workloads.

- Distributed Computing: For large-scale AI projects, distributed systems like clusters or cloud-based compute resources are used to split workloads across multiple machines, reducing training time and improving scalability.
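As a rough illustration of how a workload is split across workers and recombined, the sketch below shards a toy dataset, computes a partial gradient per shard, and averages the results. It is a minimal sketch under simplifying assumptions: threads stand in for separate machines, and `partial_gradient`, `split_into_shards`, and `data_parallel_gradient` are hypothetical helper names, not part of any framework.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_gradient(shard, weight):
    """Sum of squared-error gradients for y = weight * x over one shard."""
    return sum(2 * (weight * x - y) * x for x, y in shard)


def split_into_shards(samples, num_workers):
    """Round-robin split so every worker gets a similar share of the data."""
    return [samples[i::num_workers] for i in range(num_workers)]


def data_parallel_gradient(samples, weight, num_workers=4):
    """Compute the mean gradient by combining per-shard partial sums."""
    shards = split_into_shards(samples, num_workers)
    # Threads are a stand-in here; a real cluster would use separate machines.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(lambda s: partial_gradient(s, weight), shards))
    return sum(partials) / len(samples)


samples = [(float(x), 3.0 * x) for x in range(1, 101)]  # toy data, y = 3x
single = partial_gradient(samples, 1.0) / len(samples)   # one worker
parallel = data_parallel_gradient(samples, 1.0)          # four workers
```

In a real cluster each shard would live on a different machine and the partial results would be combined over the network, but the split, compute, and combine shape stays the same.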

Data Storage and Processing

AI systems depend on vast amounts of data, which must be stored, processed, and prepared for model training.

- Data Storage: Scalable storage solutions like data lakes (for raw, unstructured data) and data warehouses (for structured, processed data) are critical. These can be cloud-based (e.g., AWS S3, Google Cloud Storage) or on-premises.

- Data Processing Frameworks: Tools like Apache Hadoop and Apache Spark are used to clean, transform, and organize large datasets. These frameworks enable distributed processing, making it possible to handle terabytes or petabytes of data efficiently.

- Real-Time Data Processing: For applications like fraud detection or recommendation systems, stream processing tools (e.g., Apache Kafka, Apache Flink) are used to analyze data in real time.
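To make the real-time case more concrete, here is a small sketch of the sliding-window logic that a stream processor might apply for fraud detection. It is plain Python rather than Kafka or Flink code; the function name `flag_suspicious`, the event shape, and the thresholds are all assumptions chosen for illustration.

```python
from collections import deque


def flag_suspicious(events, window_seconds=60, max_events=3):
    """Yield events whose account exceeds max_events within the window.

    events: iterable of (timestamp_seconds, account_id), in time order.
    """
    recent = {}  # account_id -> deque of timestamps still inside the window
    for timestamp, account in events:
        window = recent.setdefault(account, deque())
        window.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while window and timestamp - window[0] >= window_seconds:
            window.popleft()
        if len(window) > max_events:
            yield timestamp, account


# Hypothetical event stream: account "a" makes four transactions in 30 seconds.
stream = [(0, "a"), (5, "b"), (10, "a"), (20, "a"), (30, "a"), (200, "a")]
alerts = list(flag_suspicious(stream))
```

Because the function is a generator, it handles each event as it arrives instead of waiting for the full dataset, which is the main difference from batch processing.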

Machine Learning Frameworks

ML frameworks provide the software environment needed to build, train, and deploy AI models.

- TensorFlow: Developed by Google, TensorFlow is one of the most popular frameworks for building and training deep learning models. It supports a wide range of applications, from computer vision to NLP.

- PyTorch: Known for its flexibility and ease of use, PyTorch is widely used in research and production. It integrates well with Python and is favored for dynamic computation graphs.

- Scikit-learn: A library for traditional machine learning algorithms, ideal for tasks like classification, regression, and clustering.

- Keras: A high-level API for building and training neural networks. It ships with TensorFlow as tf.keras, and since Keras 3 it can also run on JAX and PyTorch backends.

These frameworks provide pre-built functions and tools for data handling, model training, and optimization, making it easier for developers to focus on solving problems rather than building everything from scratch.
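To give a sense of what "building everything from scratch" involves, the sketch below fits a one-variable linear model in plain Python with hand-derived gradients. It is an illustrative example only: `train_linear_model` is a hypothetical name and the data is synthetic. ML frameworks replace the manual gradient lines with automatic differentiation and ready-made optimizers.

```python
def train_linear_model(samples, learning_rate=0.01, epochs=500):
    """Fit y = w * x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Gradients derived by hand; a framework would compute these for us.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Synthetic data generated from y = 2x + 1, so we know what to expect.
samples = [(x, 2.0 * x + 1.0) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]
w, b = train_linear_model(samples, learning_rate=0.05, epochs=2000)
```

Even for this tiny model, the gradients, update rule, and loop all had to be written manually, which is the work a framework takes over for models with millions of parameters.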

AI Infrastructure vs. IT Infrastructure

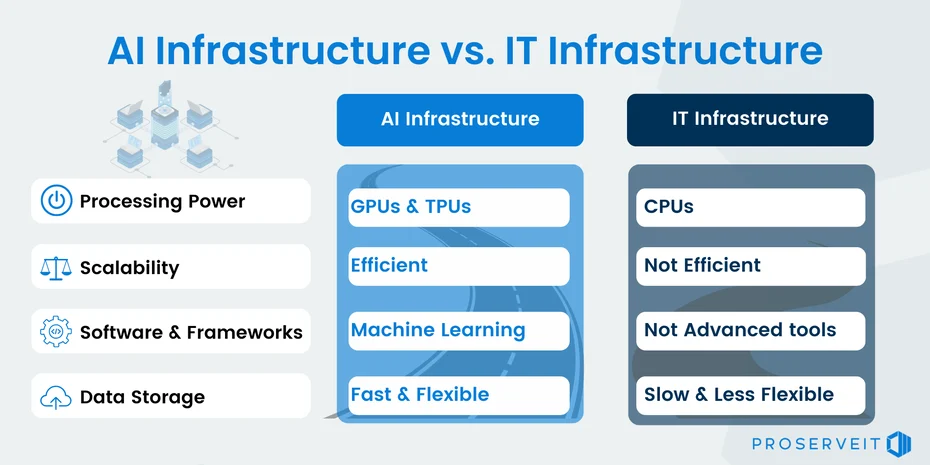

AI infrastructure and IT infrastructure are both important parts of modern digital systems, but they serve different purposes.

As we learned earlier, the main focus when designing an AI infrastructure is supporting the intensive computational requirements of AI and machine learning tasks. Processing huge datasets and training complex models efficiently requires high-performance hardware such as GPUs, TPUs, and other specialized accelerators. Besides the hardware, AI infrastructures also include dedicated software frameworks, like TensorFlow and PyTorch, and MLOps tools to manage the AI lifecycle, from training to deployment and monitoring.

On the other hand, an IT infrastructure covers the broader spectrum of hardware, software, networking, and storage systems that an organization needs to support its overall computing needs. This includes the servers, databases, and networking equipment that run enterprise applications, manage data, and ensure reliable connectivity.

So AI infrastructure is a specialized subset of IT infrastructure. It is built and designed with a focus on parallel processing, high-speed data handling, and scalability, all of which are essential to run large-scale AI models efficiently.

The diagram above highlights the main differences between AI and IT infrastructure across four factors: processing power, scalability, software, and data storage.

Importance of AI Infrastructure

The importance of AI infrastructure is hard to overstate: it is the foundation for developing, deploying, and maintaining increasingly complex AI models. As models grow in size and complexity, the need for a robust infrastructure grows with them.

Not only is training a model resource intensive, but inference also needs significant resources to produce high-quality outputs in time. A high-quality AI infrastructure significantly reduces the time required for training and inference by providing advanced computing resources like GPUs and TPUs. With GPUs, an AI system can use parallel processing to run experiments faster and iterate more often. They say time is money, and if you are a business or researcher who wants to innovate quickly to stay ahead of the competition, this speed is essential.

Another advantage of having a dedicated AI infrastructure is its scalability. Organizations can dynamically adjust computational resources depending on user demand to ensure that even as models expand, performance remains consistent. Whether using cloud-based services or on-premises clusters, scalable infrastructure allows companies to manage workloads efficiently without sacrificing speed or quality.
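The idea of adjusting resources to demand can be sketched as a simple scaling rule. The example below is a hedged illustration, not how any particular cloud autoscaler works: `replicas_needed`, the per-replica capacity, and the replica limits are hypothetical values picked for the demonstration.

```python
import math


def replicas_needed(requests_per_second, capacity_per_replica,
                    min_replicas=1, max_replicas=10):
    """Return how many model replicas to run for the current demand."""
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    # Round up so demand never exceeds the provisioned capacity.
    wanted = math.ceil(requests_per_second / capacity_per_replica)
    # Clamp to the allowed range to bound both cost and minimum availability.
    return max(min_replicas, min(max_replicas, wanted))


# Assumed capacity: each replica serves 50 requests per second.
low = replicas_needed(30, capacity_per_replica=50)
peak = replicas_needed(420, capacity_per_replica=50)
surge = replicas_needed(5000, capacity_per_replica=50)
```

The upper limit acts as a cost guard, while the lower limit keeps at least one replica available when traffic is quiet.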

Reliability and security are also paramount. Dedicated AI infrastructure is designed with strict security protocols to protect sensitive data, ensuring that proprietary information and personal data remain safe. It also enhances system reliability by minimizing downtime and reducing the risk of failures, which is critical for applications in sectors like finance, healthcare, and autonomous systems.

Cost efficiency further underscores the value of robust AI infrastructure. By leveraging automation tools and cloud platforms, companies can optimize resource usage, significantly lowering the overall expenses associated with AI projects. This cost optimization not only makes cutting-edge research more accessible but also allows businesses to integrate AI-driven solutions without prohibitive upfront investments.

Challenges and Future Directions

The most obvious challenge in building and maintaining an AI infrastructure is the huge initial investment required to set it up. High-performance devices like GPUs and TPUs are expensive, and even more so at enterprise scale, where one or two GPUs will not be enough. On top of that, organizations need to invest in large-scale storage solutions and pay fees for cloud services, which provide backup and extra processing power.

Also, if you already have a fully functioning IT infrastructure, integrating AI systems into it can be complex, demanding careful coordination between components like data storage, networking, and computing clusters. Another big hurdle is the need for specialized expertise: developing, optimizing, and maintaining AI models requires professionals with advanced skills in machine learning, data engineering, and system architecture.

At the core of any AI system is the vast amount of data that goes into training it and making predictions. As more and more businesses integrate AI systems into their workflows, protecting this often sensitive data is becoming a major challenge. Organizations therefore need robust security measures, such as encryption, multi-factor authentication, and regular vulnerability assessments, to guard against cyber threats. Companies also need to continuously update their regulatory strategies to adapt to evolving privacy laws and industry standards. This involves monitoring legal developments, conducting compliance audits, and establishing secure data-handling policies. By proactively addressing both security and regulatory challenges, organizations can maintain the integrity of their AI systems and build trust with users and stakeholders.
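One possible piece of a secure data-handling policy is to mask sensitive fields before data reaches a training pipeline. The sketch below uses keyed hashing (HMAC-SHA256) from the Python standard library. Note that this is pseudonymization, not encryption, and that `pseudonymize`, `scrub_record`, the field names, and the key are hypothetical placeholders.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace a sensitive value with a keyed, non-reversible token."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def scrub_record(record, sensitive_fields, secret_key):
    """Return a copy of record with the sensitive fields pseudonymized."""
    return {
        field: pseudonymize(str(value), secret_key)
        if field in sensitive_fields else value
        for field, value in record.items()
    }


# Placeholder key for illustration; a real key belongs in a secret store.
key = b"example-key-load-from-a-secret-store"
record = {"email": "user@example.com", "amount": 42.5}
clean = scrub_record(record, {"email"}, key)
```

The same input always maps to the same token under a given key, so records can still be joined or grouped without exposing the original value.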

Key Takeaways

- AI infrastructure is the integrated hardware and software environment designed to support the development, training, and deployment of AI/ML models, meeting their high computational and data processing demands.

- Key Components:

  - Compute Resources: GPUs, TPUs, and distributed computing systems for handling parallel processing and large-scale workloads.

  - Data Storage and Processing: Scalable solutions like data lakes, warehouses, and frameworks like Apache Spark for efficient data handling.

  - Machine Learning Frameworks: Tools like TensorFlow, PyTorch, and Scikit-learn simplify model development and training.

- AI vs. IT Infrastructure: AI infrastructure is a specialized subset of IT infrastructure, focusing on high-performance computing, scalability, and real-time data processing for AI/ML tasks.

- Importance:

  - Enables faster model training and inference through advanced hardware.

  - Offers scalability to handle growing AI workloads.

  - Ensures reliability, security, and cost efficiency for AI-driven solutions.

What is an AI infrastructure?

AI infrastructure comprises the hardware, software, networks, and tools necessary for building, training, deploying, and managing AI applications. It includes high-performance computing resources (like GPUs and TPUs), scalable data storage systems, robust networking, and specialized machine learning frameworks that collectively enable efficient development and operation of AI models.

What are the 4 types of AI systems?

A common classification of AI systems includes four types:

- Reactive Machines: These are the most basic systems, which can only react to current situations without using past experiences (e.g., IBM’s Deep Blue).

- Limited Memory: These systems can store past data or experiences to make decisions, such as self-driving cars that learn from recent events.

- Theory of Mind: This type refers to AI systems that would understand human emotions and beliefs. Such systems are still a research goal and have not been fully realized.

- Self-Aware AI: The most advanced form, which would not only understand emotions but also possess self-awareness. This remains theoretical at present.

What is the best infrastructure for AI?

The best AI infrastructure is one that is both scalable and efficient, often combining high-performance GPUs or TPUs, robust cloud services, and modern MLOps tools. This setup enables rapid model training and deployment while ensuring data security and operational reliability. Ultimately, the optimal solution depends on the specific requirements, budget, and scale of the intended application.

What is the AI infrastructure agency?

There isn’t a single, universally recognized “AI Infrastructure Agency.” Instead, various governmental bodies, industry groups, and regulatory organizations collaborate to establish standards, guidelines, and policies for AI infrastructure. These entities work to ensure that AI systems are developed and deployed securely and ethically, balancing innovation with accountability.